Ho-ho-ho! 🎅 The holiday season is here, and even the robots are taking a break from their 24/7 work shifts! 🤖💼 (Well, at least the ones who *don’t* live in the cloud.) May your Christmas be as glitch-free as a perfectly coded algorithm and your New Year as smooth as a freshly updated firmware! Please follow Robots-Blog also in 2025!

Helping Students Learn Python within a Familiar Coding Environment and at Their Own Pace

GREENVILLE, Texas, Dec. 9, 2024 /PRNewswire-PRWeb/ - VEX Robotics, a leader in K-12 STEM education, announces the launch of “Switch,” a revolutionary method for learning Computer Science. Switch is a research-based, patented feature within VEXcode, VEX Robotics’ coding platform for all its products. To date, VEXcode has offered students both block-based and Python coding. With the introduction of Switch inside VEXcode, students can simplify their transition between these two languages by integrating Python commands directly within their block-based code.
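To make the blocks-to-text idea concrete, here is a minimal sketch of how a short block program might read once it is typed as Python. The `Robot` class and its methods are hypothetical stand-ins written for this illustration; they are not the VEXcode API, and the robot here only logs commands instead of moving.

```python
class Robot:
    """Hypothetical stand-in robot that records commands instead of moving."""

    def __init__(self):
        self.log = []

    def drive_forward(self, distance_mm):
        self.log.append(f"drive forward {distance_mm} mm")

    def turn_right(self, degrees):
        self.log.append(f"turn right {degrees} degrees")


def run_square(robot, side_mm=200):
    # Block equivalent: a "repeat 4" block wrapping a "drive forward"
    # block and a "turn right" block.
    for _ in range(4):
        robot.drive_forward(side_mm)
        robot.turn_right(90)
    return robot.log


if __name__ == "__main__":
    for line in run_square(Robot()):
        print(line)
```

The loop and the two calls map one-to-one onto three blocks, which is the kind of correspondence a learner can check line by line while moving from blocks to text.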

Research has consistently shown that block-based coding is best for novice learners to begin programming. However, as students progress they are motivated by the authenticity and power of text-based coding. Research also shows that this transition, from blocks-based to text-based coding, is not trivial, and is often the reason students do not continue to study Computer Science. Switch provides educators with a new tool that fosters a deeper understanding of programming concepts.

Students can now learn Python syntax, editing, and writing at their own pace—all within the familiar block-based environment. Switch offers several key features to facilitate this learning process:

Switch’s scaffolded approach supports learners transitioning from block-based to text-based coding, building confidence and proficiency in a single, supportive environment. The development of Switch demonstrates VEX Robotics’ commitment to providing schools with programs that strengthen STEM education for students of all skill levels.

“Teaching Computer Science is important but also challenging,” said Jason McKenna, Vice President of Global Education Strategy. “Educators are seeking ways to teach programming in an approachable manner that allows students to transition from block-based to text-based coding. Switch is an innovative solution in our ongoing efforts to make STEM and Computer Science Education accessible to all students.”

In addition to facilitating a seamless transition from blocks to text-based coding, Switch assists students in the following key areas:

“Research conducted by our team offers empirical evidence for the effectiveness of Switch,” said Dr. Jimmy Lin, Director of Computer Science Education. “The findings contributed to our understanding of how to design environments that support students of varying experience levels and confidence in transitioning from blocks-based modalities to Python.”

VEXcode with Switch is free and compatible with the following VEX Robotics platforms: IQ, EXP, V5, and CTE Workcell. Additionally, VEXcode with Switch is available with a subscription in VEXcode VR, an online platform that enables users to learn programming by coding Virtual Robots (VR) in interactive, video game-like environments. VEXcode with Switch is accessible on Chromebooks, Windows, and Mac computers.

“Throughout December, in celebration of Computer Science Education Week, we’re inviting everyone to try Switch with VEXcode VR or with their VEX hardware,” said Tim Friez, Vice President of Educational Technology. “Our new Hour of Code activities and resources enable students to explore Switch coding across both hardware and virtual platforms.”

Transitioning from blocks to text can be challenging, but with the patented Switch features, it doesn’t have to be.

Discover how Switch and VEXcode can empower your students to master Python at their own pace. Visit switch.vex.com to learn more.

The Avishkaar Game Builder is a platform specifically designed for young developers to enable them to create AI-powered games. This platform offers an intuitive user interface based on visual, block-based programming. This makes it easily accessible even for beginners who have no prior knowledge of programming. The platform not only encourages learning programming skills but also creativity and problem-solving skills.

A special feature of the Avishkaar Game Builder is the integration of AI elements into game development. Users can create games that respond to gestures or body movements, enabling an interactive and innovative gaming experience. This is supported by the AMS Play system that leverages advanced technologies such as motion detection.

The platform is primarily aimed at children aged 10 and above and offers numerous tutorials and learning resources to help them get started. Through this playful approach to technology, young people will not only learn the basics of game development but also advanced concepts such as artificial intelligence and animation.

Avishkaar Game League 2024

The Avishkaar Game League 2024 is an international competition that invites young developers to showcase their skills in game development. This year’s edition is themed “Rise of the Machines” and centers on the idea that “AI Becomes Self-Reliant”. Participants are expected to develop games that incorporate the concept of AI getting out of control.

The competition aims to encourage creativity and innovation. Teams from different countries compete against each other using the Avishkaar Game Builder and the AMS Play system to develop their games. The competition provides a platform for collaboration and exchange between young developers worldwide.

Competition structure

– **Registration:** Registration for the Avishkaar Game League 2024 started on October 25, 2024.

– **Theme:** “Rise of the Machines” – Participants develop games around the topic of AI.

– **Platform:** Participants use the AMS Play Game Builder to create their games.

– **Prizes:** In addition to trophies and medals, winners can also win gadgets, mentoring sessions and other valuable prizes.

Aims of the competition

The competition not only promotes technical knowledge in the areas of programming and AI, but also important soft skills such as teamwork, problem solving and creativity. By developing games, participants learn how to use modern technologies in a hands-on way.

The **Avishkaar Game Builder** offers children an exciting opportunity to immerse themselves in the world of game development while leveraging modern technologies such as AI. The **Avishkaar Game League 2024** complements this learning platform with an international competition that brings young talents together and gives them a stage to showcase their skills. Both initiatives help nurture future innovators and prepare them for a technological future.

To participate in the competition challenge free of charge, use the code “ROBOTSBLOG”.

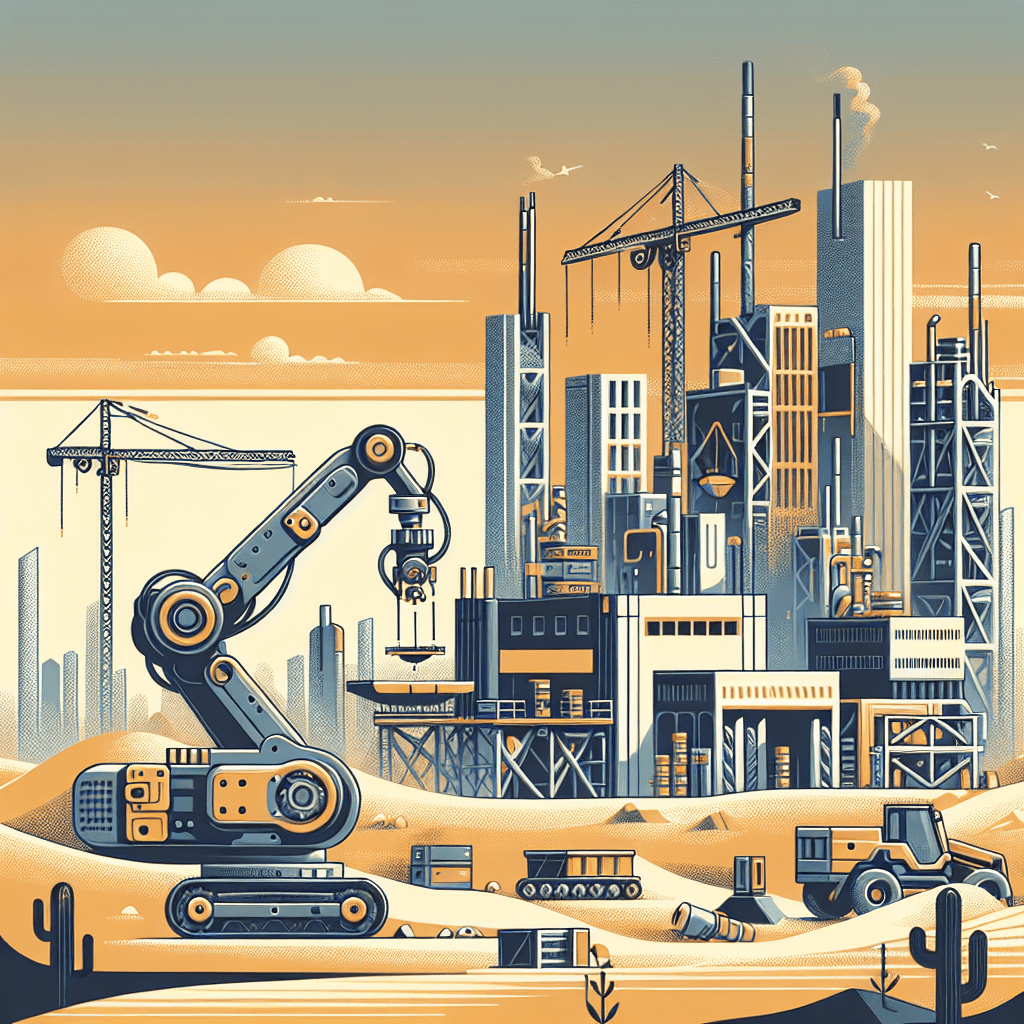

Robotics has quickly become pivotal across industries like manufacturing, health care and agriculture, transforming how work gets done. It boosts productivity and efficiency by taking on repetitive, precise and physically demanding tasks. This tech revolution is now entering construction, a sector known for its grueling physical demands and labor-intensive processes.

Autonomous robots are stepping up to handle critical jobs — from site inspections to heavy lifting — helping jobsites become safer, more efficient and more precise. This shift is fundamental to an industry where advanced robotics and human expertise work together, reimagining what’s possible on the modern jobsite.

Autonomous robots are making serious headway in the construction industry. Still, only about 55% of the sector globally is currently harnessing their potential. For companies that have embraced this technology, these devices have already tackled some of the most crucial and challenging tasks on jobsites.

For example, drones can handle aerial inspections, create detailed site maps and monitor progress with remarkable accuracy and speed. Meanwhile, specialized robots enhance bricklaying into a faster, more precise process. Moreover, large-scale 3D printing machines can construct entire structures, revolutionizing project planning and execution.

As these technologies improve, robots increasingly take on the larger and more complex tasks that once required intense physical labor. From foundational work to finishing touches, construction robots' capabilities are expanding rapidly. They manage these high-stakes jobs to reshape the industry and set new speed, safety and efficiency standards on modern jobsites.

This movement toward automation opens doors to a construction landscape where advanced technology and skilled labor work in tandem. It paves the way for more ambitious projects and a more streamlined building process.

Robots come in many shapes and sizes, each designed to handle specific tasks that enhance precision. From ground-level operations to overhead site monitoring, these machines help employees plan, manage and execute projects. Here’s a look at some of the types of autonomous robots making a difference on jobsites today:

Each type of robot plays a specialized role, showing how automation can address the unique demands of construction and reshape what’s possible on jobsites.

Robots benefit construction projects, especially regarding efficiency, precision and safety. Unlike human crews, these devices can work continuously to help reduce project timelines and keep everything on schedule. With their high level of accuracy, they minimize errors and waste, which also reduces costly rework and makes each project more resource-efficient.

Perhaps most importantly, automation makes construction sites safer. For example, taking over repetitive and physically taxing tasks helps prevent injuries related to repetitive stress and fatigue. This creates a safer work environment where human workers can focus on tasks requiring skill and oversight.

The rise of robotics in construction transforms job roles, shifting the focus from hands-on labor to more specialized oversight and technical maintenance. As robots handle demanding tasks like heavy lifting, bricklaying and site inspections, the U.S. construction industry is expected to need around 500,000 new workers in 2024 to meet project demands.

Robotics could help address this gap while introducing a tech-driven shift on jobsites. Although concerns about job displacement are natural, experts see robots and humans as a powerful team, each enhancing the other’s strengths. Machines can tackle repetitive, high-risk jobs to make sites safer. Meanwhile, human workers focus on decision-making, creativity and complex problem-solving. This collaboration helps build a smarter, safer workforce.

Over the next decade, construction robotics can make major leaps forward. In fact, experts predict the global market for these technologies could exceed $242 million by 2030. Emerging tools like AI integration and enhanced sensors are expected to bring new levels of functionality to robots. They allow them to analyze data, adapt to changing environments and make intelligent decisions to boost precision and productivity on jobsites.

These advancements may lead to fully autonomous construction sites, where robots manage nearly every aspect of the build, from digging foundations to applying finishing touches. Such a shift would slash project timelines and improve safety by reducing human exposure to high-risk tasks. It also sets the stage for an industry that combines cutting-edge automation with human oversight to deliver faster, safer and more efficient projects than ever before.

While robots bring tremendous benefits to construction sites, they also open up new roles for workers in areas like oversight, programming and maintenance. However, these advancements come with challenges, which require workers to adapt to a tech-driven environment and develop specialized skills to thrive alongside automation.

Guest article by Ellie Gabel. Ellie is a writer living in Raleigh, NC. She's passionate about keeping up with the latest innovations in tech and science. She also works as an associate editor for Revolutionized.

After a string of successful Kickstarter campaigns, Geek Club and CircuitMess have teamed up once again to launch an exciting new project: an AI-powered desk robot designed especially for Rick and Morty fans.

Zagreb, Croatia – November 2024 – Geek Club and CircuitMess are thrilled to announce the official launch of their Kickstarter campaign for the Butter Bot, a revolutionary AI-powered robot inspired by the one made famous in the popular Adult Swim series, Rick and Morty, and first seen in season 1 episode 9. This innovative product is officially licensed by Warner Bros. Discovery Global Consumer Products (WBDGCP), bringing the iconic Butter Bot to life in a way fans have never seen before.

From Screen to Reality: A Partnership That Is Bringing Butter Bot to Life

A love of Rick and Morty shared by the CircuitMess and Geek Club teams led to this project. Both companies saw an opportunity to bring a beloved character to life in a way that was both true to the show and technologically innovative. They chose the Butter Bot because of its iconic status and potential for interactive, educational, and entertaining applications. By turning a simple butter-passing robot into a multifunctional AI companion, they aimed to create a product that would resonate with fans and tech enthusiasts alike, merging humor and functionality seamlessly.

From Mars to Multiverse: The Next Chapter for CircuitMess and Geek Club

The collaboration was a logical union of the two companies after the massive success of the previous Kickstarter – NASA Perseverance AI-powered Mars Rover. Both companies create educational STEM DIY kits for kids and adults in order to make learning STEM skills easy and fun. While CircuitMess is focused primarily on toys, Geek Club’s products are always robot and space-themed.

“Partnering with WBDGCP on this project has been an exciting next step in our collaboration with CircuitMess,” says Nico, co-founder of Geek Club. “The Butter Bot is a testament to what happens when creativity, technology, and fandom collide. We’re excited to see the support and engagement from the community as we launch our Kickstarter campaign.”

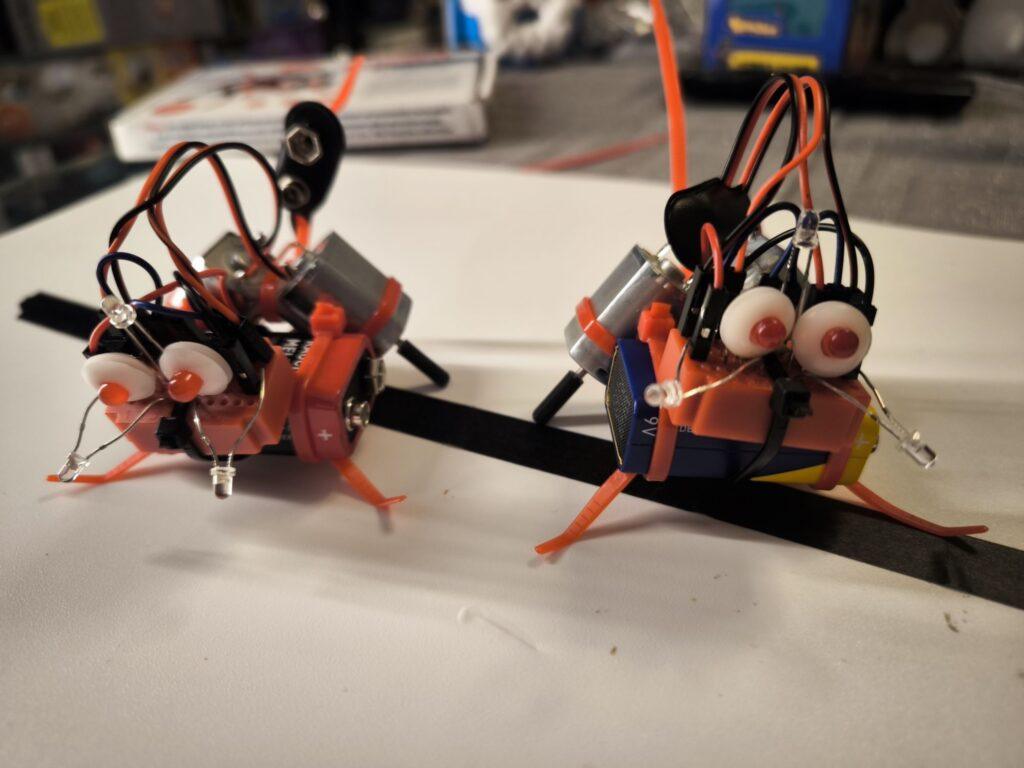

Geek Club is an American company that specializes in designing and producing DIY robotics kits that educate their users on soldering and electronics. They focus primarily on space exploration and robotics, all to make learning engineering skills easy and fun for their young and adult audience.

Beyond Butter: Introducing the AI-Powered Butter Bot

The Butter Bot is not just a simple robot whose sole purpose is passing the butter, as it was in the show. It comes with a unique remote controller that allows for precise maneuvering while also providing a live feed from the bot’s camera. The interactive robot has advanced AI capabilities, making it a versatile companion in any setting.

“Bringing the Butter Bot from Rick and Morty into the real world has been an incredible journey,” says Albert Gajšak, CEO of CircuitMess. “We’ve poured our hearts into creating a product that not only honors the original character but also adds a whole new level of interactivity and functionality. We can’t wait to see how fans will use and enjoy it.”

To date, CircuitMess has developed numerous educational products that encourage kids and adults to create rather than just consume. They have successfully delivered 7 Kickstarter campaigns and made thousands of geeks worldwide extremely happy. CircuitMess' kits are a unique blend of resources for learning about hardware and software in a fun and exciting way.

The Butter Bot Kickstarter campaign offers backers exclusive early-bird pricing and special rewards.

For more information and to back the project, visit the Butter Bot Kickstarter page here (https://www.kickstarter.com/projects/albertgajsak/rick-and-mortytm-butter-bot-an-ai-powered-desk-robot), or check out the Geek Club and CircuitMess websites to see all their other kits.

About Geek Club

Geek Club is a crew of designers and engineers led by co-founders Nicolas Deladerrière and Nikita Potrashilin. Their mission is to build electronic construction kits for curious minds in over 70 countries worldwide. They are most often inspired by NASA and other Space Agencies of the world and hope to push their fans’ minds further, strengthen their skills, and advance their knowledge of electronics and Space.

Their vision is to create a world of inventors for the next generation of technology with their space-themed STEM kits. Find out more at www.geekclub.com.

Nicolas Deladerrière, co-founder of Geek Club


About CircuitMess

CircuitMess is a technology startup founded in 2017 by Albert Gajšak and Tomislav Car after a successful Kickstarter campaign for MAKERbuino.

CircuitMess employs young, ambitious people and has recently moved to a new office in Croatia’s capital, Zagreb, searching for talented individuals who will help them create unique electronic products and bring technology to the crowd in a fun and exciting way. Find out more at www.circuitmess.com.

Albert Gajšak, co-founder and CEO of CircuitMess


About Rick and Morty

Rick and Morty is the Emmy® award-winning half-hour animated hit comedy series on Adult Swim that follows a sociopathic genius scientist who drags his inherently timid grandson on insanely dangerous adventures across the universe. Rick Sanchez is living with his daughter Beth’s family and constantly bringing her, his son-in-law Jerry, granddaughter Summer, and grandson Morty into intergalactic escapades.

Rick and Morty stars Ian Cardoni, Harry Belden, Sarah Chalke, Chris Parnell, and Spencer Grammer.

About Warner Bros. Discovery Global Consumer Products

Warner Bros. Discovery Global Consumer Products (WBDGCP), part of Warner Bros. Discovery’s Revenue & Strategy division, extends the company’s powerful portfolio of entertainment brands and franchises into the lives of fans around the world. WBDGCP partners with best-in-class licensees globally on award-winning toy, fashion, home décor and publishing programs inspired by the biggest franchises from Warner Bros.’ film, television, animation, and games studios, HBO, Discovery, DC, Cartoon Network, HGTV, Eurosport, Adult Swim, and more. With innovative global licensing and merchandising programs, retail initiatives, and promotional partnerships, WBDGCP is one of the leading licensing and retail merchandising organizations in the world.

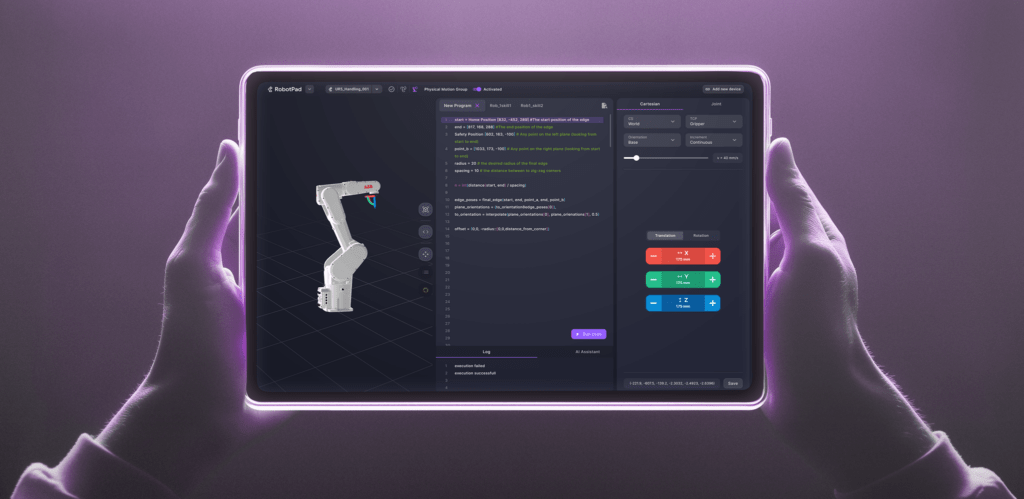

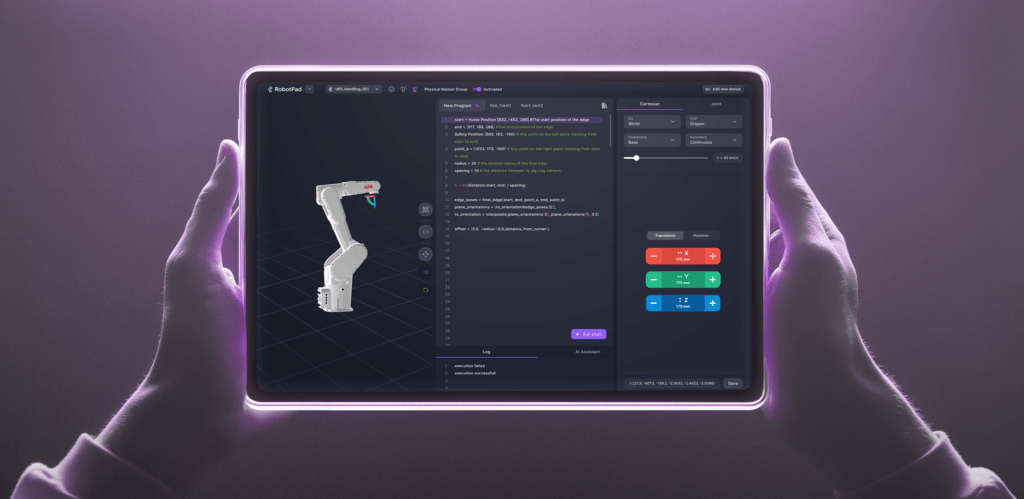

Dresden, November 6th, 2024 – Last night at its exclusive launch event in Dresden, Wandelbots unveiled the world’s first agnostic operating system specifically developed for industrial robotic automation. With NOVA, a new era of automation begins, setting new standards in efficiency, accessibility, and innovation.

“With NOVA, we are witnessing a new dawn in industrial automation,” said Christian Piechnick, CEO of Wandelbots. “Just as Android revolutionized smartphones and Windows transformed the PC world, NOVA will make industrial robotics accessible to everyone and create new ways for software developers to commercialize.”

Carl Doeksen, Global Robotics / Automation Director for Abrasive Systems at 3M, states: “At 3M, we collaborate with startups to augment our innovation model. We’re proud to be part of Wandelbots' journey to democratize robotics. Today, the vast majority of abrasive applications are still done manually. Wandelbots NOVA will lower the barrier for companies to automate these types of processes. With NOVA, users are empowered with the process know-how they need to select the right abrasive materials; they can easily input the parameters for their workpiece and specify the desired final state using a simple UI. For 3M, NOVA provides an opportunity not only to equip end-users with the tools they need, but also to make our extensive expertise in process technology more accessible.”

Modern Programming Languages and Vendor Independence

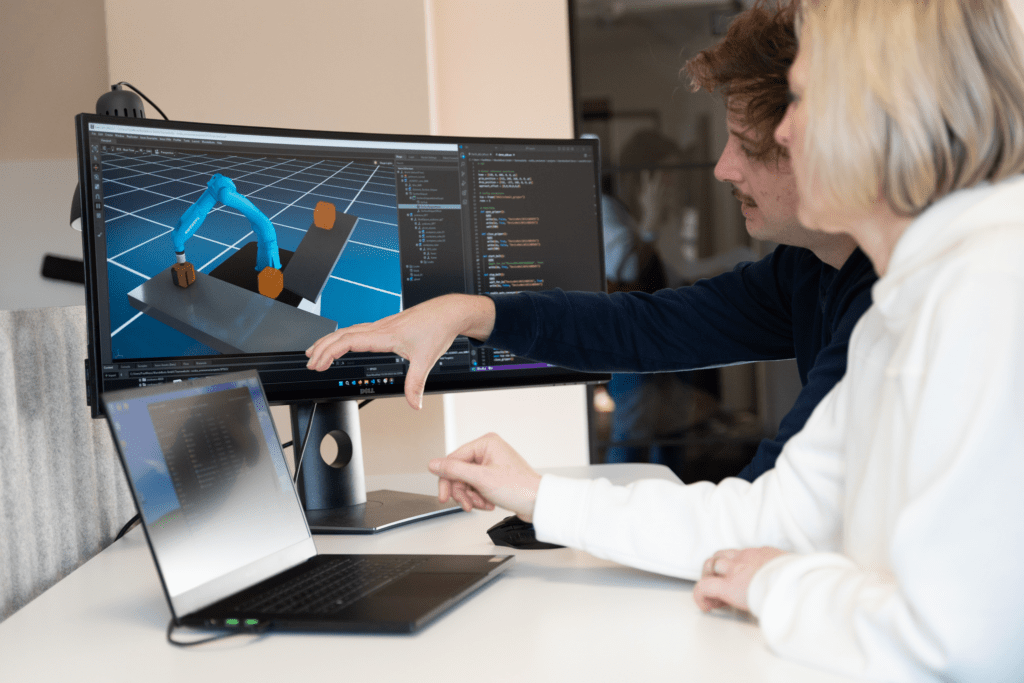

Wandelbots NOVA is the world’s first agnostic operating system for robots, designed to make robotics accessible to everyone. By supporting modern development tools like Python and JavaScript, NOVA empowers millions of developers to create and scale robotic applications with ease, reducing the complexity of automation. Following a Plan, Build, and Operate approach, NOVA simplifies the entire automation lifecycle with AI technologies at its core—from planning and simulation to deployment and scaling—ensuring continuous support throughout each phase. Its seamless integration with existing hardware allows businesses to leverage past investments while scaling across multiple robots and brands, without costly retooling—accelerating projects, lowering costs, and increasing flexibility. Wandelbots’ close collaboration with Microsoft and OpenAI ensures scalability and that the latest and greatest AI capabilities enhance NOVA’s feature set.

As a vendor-independent operating system, NOVA simplifies and optimizes the complex world of industrial robotics by integrating hardware components from various manufacturers and making them accessible to everyone through a modern interface. Complex programming and inflexible automation landscapes are now things of the past. NOVA’s open API concept, unique user experience, and seamless integration of externally developed apps make it a versatile platform for both robot users and millions of software developers.
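The general idea behind a vendor-independent layer can be sketched in a few lines of Python. This is an illustration of the design pattern only, not NOVA's API or Wandelscript: the class names, the `move_to` method, and both command formats are invented for this example.

```python
from abc import ABC, abstractmethod


class RobotDriver(ABC):
    """Common interface the application is written against (hypothetical)."""

    @abstractmethod
    def move_to(self, x: float, y: float, z: float) -> str:
        """Return the vendor-specific command for a Cartesian move given in mm."""


class VendorADriver(RobotDriver):
    # Made-up controller dialect that expects millimetres.
    def move_to(self, x, y, z):
        return f"MOVL X={x:.1f} Y={y:.1f} Z={z:.1f}"


class VendorBDriver(RobotDriver):
    # Made-up controller dialect that expects metres.
    def move_to(self, x, y, z):
        return f"movel([{x / 1000:.4f}, {y / 1000:.4f}, {z / 1000:.4f}])"


def pick_and_place(driver: RobotDriver, pick, place):
    # Application logic is written once and never mentions a vendor.
    return [driver.move_to(*pick), driver.move_to(*place)]


if __name__ == "__main__":
    pick, place = (100.0, 0.0, 50.0), (0.0, 200.0, 50.0)
    for driver in (VendorADriver(), VendorBDriver()):
        print(pick_and_place(driver, pick, place))
```

Swapping the robot brand then means swapping the driver object, while `pick_and_place` stays unchanged. That is the property the paragraph above describes when it speaks of scaling across manufacturers without reprogramming.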

Proprietary and outdated programming languages, high operating costs, a lack of skilled labor, and closed ecosystems have so far prevented the full realization of robotics' enormous potential, especially in small- and medium-sized enterprises, where robotics is still a niche topic. NOVA, as a unified operating system, will significantly advance robotics by enabling easy programming and control of robots from different manufacturers without the need for specialized expertise. This reduces operating costs, speeds up innovation processes, and eliminates the dependence on hard-to-find skilled personnel. Especially for SMEs, where the current level of automation is still in the single digits, NOVA opens up entirely new perspectives.

An Open Ecosystem for Software Developers

Wandelbots NOVA is much more than just an operating system. It offers a unified user interface for all robot manufacturers and models. Software developers can access development tools, UI elements, standardized libraries, and sample applications through the dedicated developer portal. Using the Python-based programming language “Wandelscript,” they can create innovative solutions for interacting with robots and share their experiences within the community specifically created for this purpose.

A New Era of Automation

The launch of NOVA marks a turning point. It opens up new opportunities for companies to leverage automation. In addition, software developers, who currently create apps or websites, can now unleash their creativity in a completely new industry.

How NOVA Supports Companies

Wandelbots NOVA was developed with four key objectives to support companies:

Addressing the Labor Shortage and Simplifying Robotics

One major advantage of NOVA is its ability to counter the growing shortage of skilled robot programmers and production workers. Through intuitive frontend applications and a unified user interface, NOVA makes it easier than ever for employees to interact with robots without requiring technical knowledge. A modern and unified programming language also opens up a completely new field of activity for millions of software developers, thus strengthening the industry’s innovative capacity.

Investment Protection Thanks to Realistic Simulation with Digital Twins

Future automation systems can be tested for functionality before costly hardware equipment is purchased. The integration of Wandelbots NOVA with the NVIDIA Omniverse platform empowers developers and operators to create physically accurate digital twins of robots, factories, and movable workpieces for simulation before real-world deployment. This seamless integration delivers consistency between the digital twin and the physical automation cell throughout their entire lifecycle, which can enhance efficiency and reduce potential errors.

Wandelbots NOVA – Beta Phase Starts Now

Since June, roughly 50 initial customers have been able to experience the advantages of Wandelbots NOVA in the closed beta phase. Now, Wandelbots is making its operating system available to more users in the open beta phase. Interested users can register at https://www.wandelbots.com/developers-beta

Learn More at the SPS Trade Fair

We warmly invite you to see NOVA for yourself and test it live at the SPS from November 12th to 14th: https://www.wandelbots.com/sps You can find us in Hall 6, Stand 248. Feel free to contact us at [email protected] to schedule an appointment. We are also available for interviews on-site.

In short:

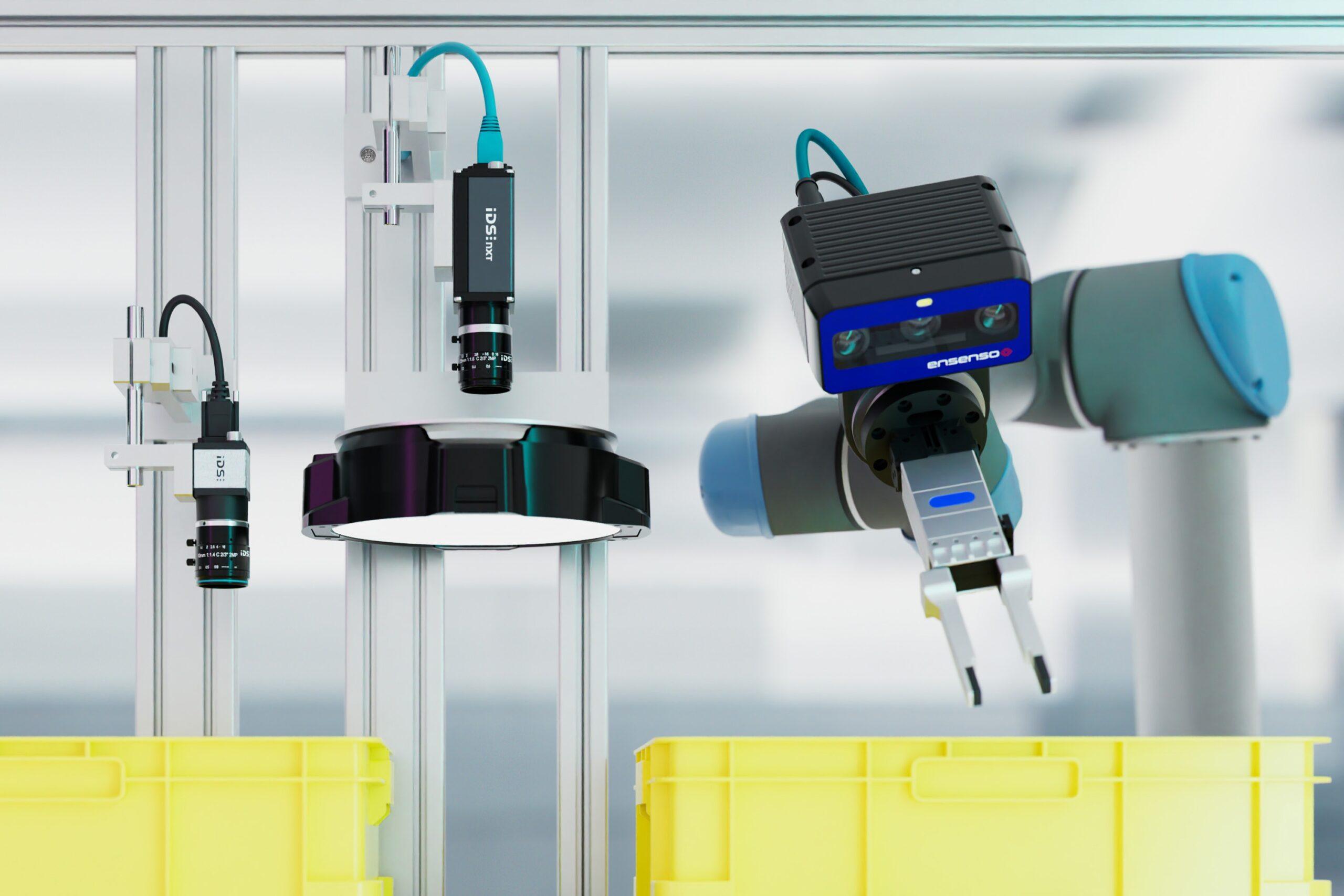

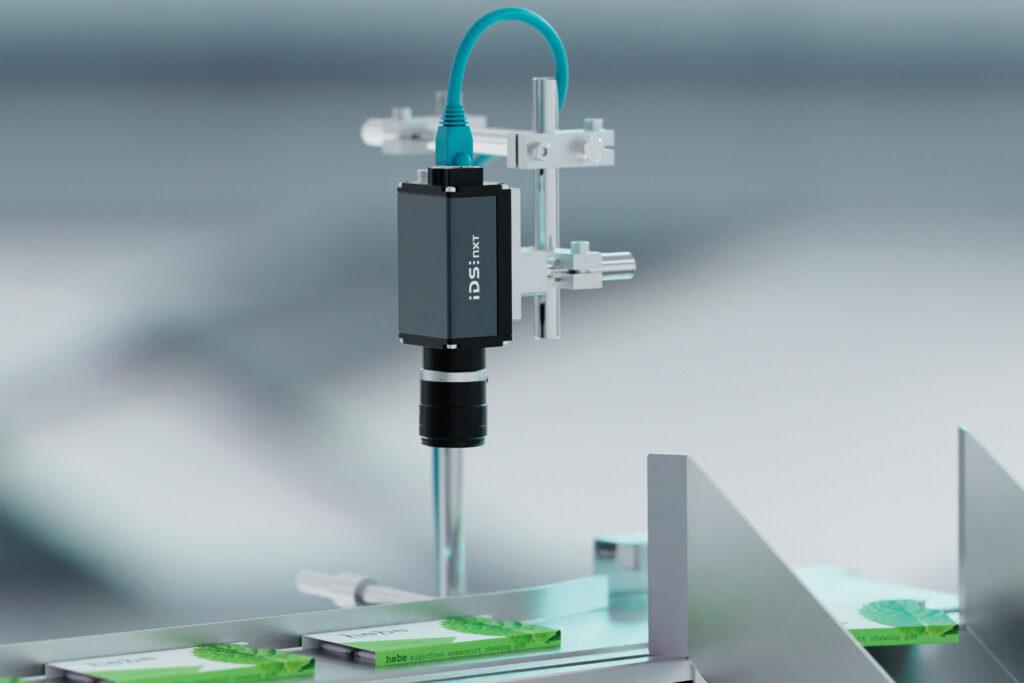

Innovative Industrial Cameras for Robotics and Process Automation to Take Center Stage at Stand 6-360

Detecting the smallest errors, increasing throughput rates, preventing wear – industrial cameras provide important information for automated processes. IDS will be demonstrating which technologies and products are particularly relevant at SPS / Smart Production Solutions in Nuremberg, Germany, from 12 to 14 November. If you want to experience how small cameras can achieve big things, stand 6-360 is the right place to visit.

Around 1,200 companies will be represented in a total of 16 exhibition halls at the trade fair for smart and digital automation. IDS will be taking part for the first time, focussing on industrial image processing for robotics, process automation and networked systems. Philipp Ohl, Head of Product Management at IDS, explains: “Automation and cameras? They go together like a lock and key. Depending on the task, very different qualities are required – such as particularly high-resolution images, remarkably fast cameras or models with integrated intelligence.” Consequently, the products and demo systems that the company will be showcasing at SPS are highly diverse.

The highlights of IDS can be divided into three categories: 2D, 3D and AI-based image processing. The company will be presenting uEye Live, a newly developed product line. These industrial-grade monitoring cameras enable live streaming and are designed for the continuous monitoring and documentation of processes. IDS will also be introducing a new event-based sensor that is recommended for motion analyses or high-speed counting. It enables the efficient detection of rapid changes through continuous pixel-by-pixel detection instead of the usual sequential image-by-image analysis.
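The difference between event-based and frame-based sensing can be illustrated with a small simulation. The sketch below is a simplified model, not IDS software: a real event-based sensor detects changes asynchronously in hardware at each pixel, whereas this example derives events from a list of frames purely for demonstration. The function name, the threshold value and the log-brightness model are assumptions made for this example.

```python
import math


def generate_events(frames, threshold=0.2):
    """Emit (t, x, y, polarity) whenever a pixel's log-brightness has changed
    by at least `threshold` since that pixel's last event."""
    height, width = len(frames[0]), len(frames[0][0])
    # Per-pixel reference level, initialised from the first frame.
    ref = [[math.log(frames[0][y][x] + 1.0) for x in range(width)]
           for y in range(height)]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y in range(height):
            for x in range(width):
                level = math.log(frame[y][x] + 1.0)
                delta = level - ref[y][x]
                if abs(delta) >= threshold:
                    # Polarity +1 means brighter, -1 means darker.
                    events.append((t, x, y, 1 if delta > 0 else -1))
                    ref[y][x] = level  # only pixels that fired are updated
    return events


if __name__ == "__main__":
    # A bright spot moving left to right across a 1x3 sensor.
    frames = [[[100, 10, 10]], [[10, 100, 10]], [[10, 10, 100]]]
    for event in generate_events(frames):
        print(event)
```

In this toy sequence only the pixels the spot enters or leaves produce output; static pixels stay silent. With a mostly unchanging scene, the event stream is therefore far smaller than the full images a frame-by-frame analysis would have to process, which is what makes the approach attractive for motion analysis and high-speed counting.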

In the 3D cameras product segment, IDS will be demonstrating the advantages of the new stereo vision camera Ensenso B for precise close-range picking tasks as well as a prototype of the first time-of-flight camera developed entirely in-house. Anyone interested in robust character recognition will also find what they are looking for at the trade fair stand: Thanks to DENKnet OCR, texts and symbols on surfaces can be reliably identified and processed. IDS will be exhibiting at SPS, stand 6-360.

More information: https://en.ids-imaging.com/sps-2024.html