Unitree Quadruped Go 1 Dance Video 1+2. Find the latest News on robots, drones, AI, robotic toys and gadgets at robots-blog.com. If you want to see your product featured on our Blog, Instagram, Facebook, Twitter or our other sites, contact us.


Robots-Blog wishes a merry X-Mas and a happy new year!

Robots-Blog wishes a merry X-Mas and a happy new year! Thanks @mybotshopgermany for lending the Unitree Quadruped Go1 robot! Find the latest News on robots, drones, AI, robotic toys and gadgets at robots-blog.com

Keep moving

Communication & Safety Challenges Facing Mobile Robots Manufacturers

Mobile robots are everywhere, from warehouses to hospitals and even on the street. Their popularity is easy to understand; they’re cheaper, safer, easier to find, and more productive than human workers. They’re easy to scale or combine with other machines. As mobile robots collect a lot of real-time data, companies can use them to start their IIoT journey.

But to work efficiently, mobile robots need safe and reliable communication. This article outlines the main communication and safety challenges facing mobile robot manufacturers and provides an easy way to overcome these challenges to keep mobile robots moving.

What are Mobile Robots?

Before we begin, let’s define what we mean by mobile robots.

Mobile robots transport materials from one location to another and come in two types, automated guided vehicles (AGVs) and autonomous mobile robots (AMRs). AGVs use guiding infrastructure (wires, reflectors, or magnetic strips) to follow predetermined routes. If an object blocks an AGV’s path, the AGV stops and waits until the object is removed.

AMRs are more dynamic. They navigate via maps and use data from cameras, built-in sensors, or laser scanners to detect their surroundings and choose the most efficient route. If an object blocks an AMR’s planned route, it selects another route. As AMRs are not reliant on guiding infrastructure, they’re quicker to install and can adapt to logistical changes.
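The rerouting behaviour described above can be illustrated with a minimal sketch. It assumes a hypothetical occupancy grid (0 = free, 1 = blocked) and uses plain breadth-first search; real AMR planners are considerably more sophisticated, and the function name `shortest_route` is invented for this example.

```python
from collections import deque


def shortest_route(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are blocked.

    Returns the list of cells from start to goal, or None if no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    previous = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the route.
            route = []
            while cell is not None:
                route.append(cell)
                cell = previous[cell]
            return route[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in previous:
                previous[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical 3x4 floor map: 0 = free, 1 = blocked.
floor = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
planned = shortest_route(floor, (0, 0), (0, 3))

# An obstacle appears on the planned route. An AGV would stop and wait here;
# the AMR in this sketch simply plans again on the updated map.
floor[0][2] = 1
replanned = shortest_route(floor, (0, 0), (0, 3))
```

In this toy map the first plan runs straight along the top row, and the second plan detours along the bottom row once the top row is blocked.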

What are the Communication and Safety Challenges Facing Mobile Robot Manufacturers?

1. Establish a Wireless Connection

The first challenge for mobile robot manufacturers is to select the most suitable wireless technology. The usual advice is to establish the requirements, evaluate the standards, and choose the best match. Unfortunately, this isn’t always possible for mobile robot manufacturers as often they don’t know where the machine will be located or the exact details of the target application.

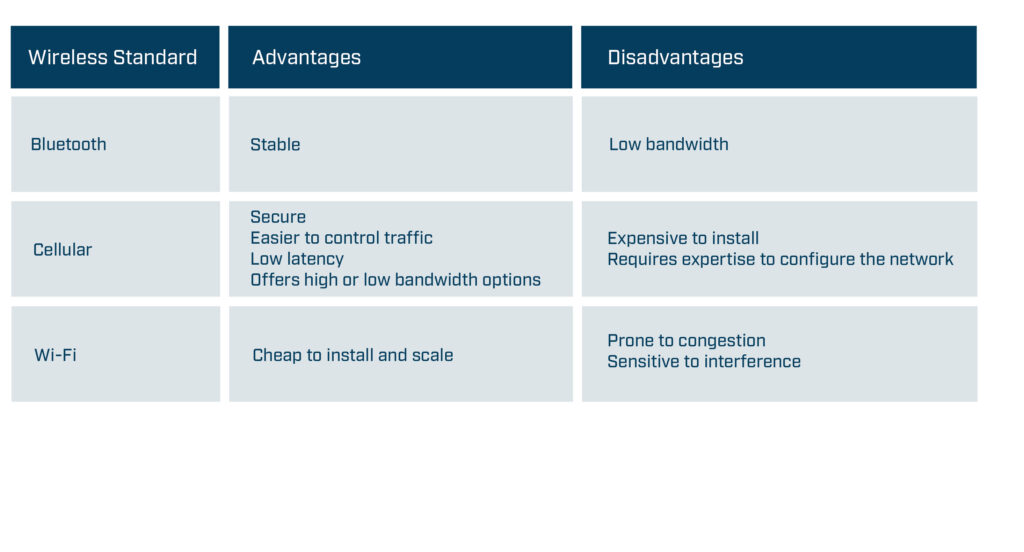

Sometimes a Bluetooth connection will be ideal as it offers a stable non-congested connection, while other applications will require a high-speed, secure cellular connection. What would be useful for mobile robot manufacturers is to have a networking technology that’s easy to change to meet specific requirements.

Wireless standards: high-level advantages and disadvantages

The second challenge is to ensure that the installation works as planned. Before installing a wireless solution, complete a predictive site survey based on facility drawings to ensure the mobile robots have sufficient signal coverage throughout the location. The site survey should identify the optimal location for the access points (APs), the correct antenna type, the optimal antenna angle, and how to mitigate interference. After the installation, use wireless sniffer tools to check the design and adjust the APs or antennas as required.
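As a rough first check before a proper site survey, signal strength at a given distance can be estimated with the standard free-space path loss formula. The sketch below is only that: it ignores walls, racking, and interference, so it is optimistic for indoor sites. The transmit power and the -67 dBm target are assumptions chosen for illustration, not values from this article.

```python
import math


def free_space_path_loss_db(distance_m: float, frequency_mhz: float) -> float:
    """Free-space path loss in dB (distance in metres, frequency in MHz)."""
    distance_km = distance_m / 1000.0
    return 20 * math.log10(distance_km) + 20 * math.log10(frequency_mhz) + 32.44


def estimated_signal_dbm(tx_power_dbm: float, distance_m: float,
                         frequency_mhz: float, antenna_gain_db: float = 0.0) -> float:
    """Received signal estimate: transmit power plus gain minus path loss."""
    loss = free_space_path_loss_db(distance_m, frequency_mhz)
    return tx_power_dbm + antenna_gain_db - loss


def is_covered(signal_dbm: float, required_dbm: float = -67.0) -> bool:
    """True if the estimate meets the (assumed) minimum signal level."""
    return signal_dbm >= required_dbm


# Hypothetical access point with 20 dBm transmit power, robot 100 m away.
signal_2g4 = estimated_signal_dbm(20.0, 100.0, 2400.0)
signal_5g = estimated_signal_dbm(20.0, 100.0, 5500.0)
print(round(signal_2g4, 1), is_covered(signal_2g4))
print(round(signal_5g, 1), is_covered(signal_5g))
```

Under these assumptions the 2.4 GHz link clears the target at 100 m while the 5.5 GHz link falls just short, which is the kind of result that would prompt an extra access point or a different antenna in the survey.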

2. Connecting Mobile Robots to Industrial Networks

Mobile robots need to communicate with controllers at the relevant site even though the mobile robots and controllers are often using different industrial protocols. For example, an AGV might use CANopen while the controller might use PROFINET. Furthermore, mobile robot manufacturers may want to use the same AGV model on a different site where the controller uses another industrial network, such as EtherCAT.

Mobile robot manufacturers also need to ensure that their mobile robots have sufficient capacity to process the required amount of data. The required amount of data will vary depending on the size and type of installation. Large installations may use more data as the routing algorithms need to cover a larger area, more vehicles, and more potential routes. Navigation systems such as vision navigation process images and therefore require more processing power than installations using other navigation systems such as reflectors. As a result, mobile robot manufacturers must solve the following challenges:

- They need a networking technology that supports all major fieldbus and industrial Ethernet networks.

- It needs to be easy to change the networking technology to enable the mobile robot to communicate on the same industrial network as the controller without changing the hardware design.

- They need to ensure that the networking technology has sufficient capacity and functionality to process the required data.
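The requirement to swap the network without touching the rest of the machine can be pictured in software terms as programming against an interface. The sketch below is purely illustrative: the class names are hypothetical, and the modules only return strings rather than speaking PROFINET or EtherCAT.

```python
from abc import ABC, abstractmethod


class NetworkModule(ABC):
    """Hypothetical interface for an exchangeable communication module."""

    @abstractmethod
    def publish(self, signal: str, value: float) -> str:
        """Send one value to the site controller and return a log line."""


class ProfinetModule(NetworkModule):
    def publish(self, signal: str, value: float) -> str:
        # A real module would map the value into PROFINET process data.
        return f"PROFINET <- {signal}={value}"


class EtherCatModule(NetworkModule):
    def publish(self, signal: str, value: float) -> str:
        # A real module would map the value into EtherCAT process data.
        return f"EtherCAT <- {signal}={value}"


class MobileRobot:
    """Robot logic depends only on the interface, never on one network."""

    def __init__(self, network: NetworkModule) -> None:
        self.network = network

    def report_speed(self, speed_m_s: float) -> str:
        return self.network.publish("speed_m_s", speed_m_s)


# The same robot model is deployed on two sites with different networks.
site_a = MobileRobot(ProfinetModule())
site_b = MobileRobot(EtherCatModule())
print(site_a.report_speed(1.2))
print(site_b.report_speed(1.2))
```

The point of the design is that `MobileRobot` never changes; only the module passed to it does.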

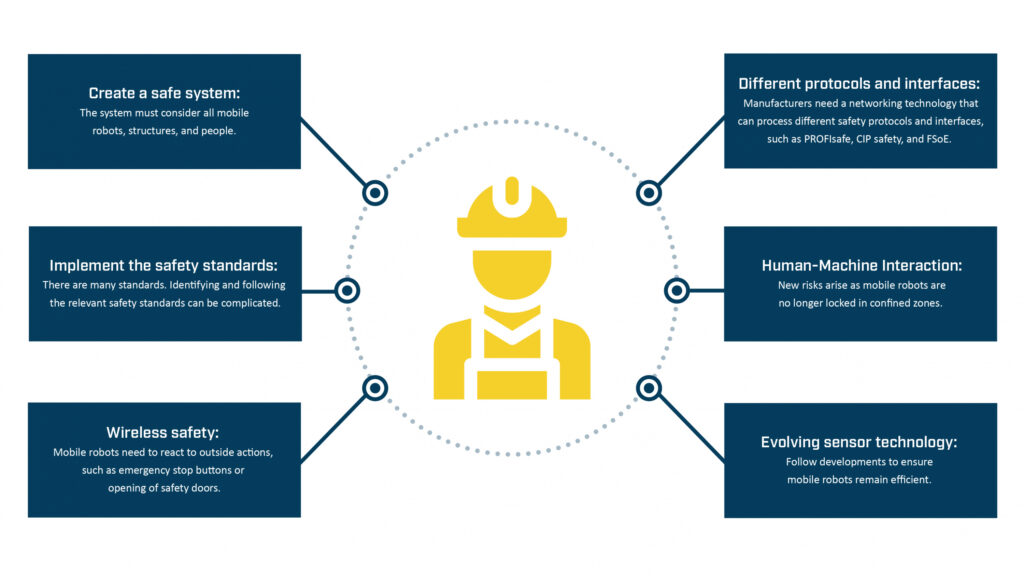

3. Creating a Safe System

Creating a system where mobile robots can safely transport material is a critical but challenging task. Mobile robot manufacturers need to create a system that considers all the diverse types of mobile robots, structures, and people in the environment. They need to ensure that the mobile robots react to outside actions, such as someone opening a safety door or pushing an emergency stop button, and that the networking solution can process different safety protocols and interfaces. They need to consider that AMRs move freely and manage the risk of collisions accordingly. The technology used in sensors is constantly evolving, and mobile robot manufacturers need to follow the developments to ensure their products remain as efficient as possible.

Overview of Safety Challenges for Mobile Robot Manufacturers

Safety Standards

The safety standards provide guidelines on implementing safety-related components, preparing the environment, and maintaining machines or equipment.

While compliance with the different safety standards (ISO, DIN, IEC, ANSI, etc.) is mostly voluntary, machine builders in the European Union are legally required to follow the safety standards in the machinery directives. Machinery directive 2006/42/EC is always applicable for mobile robot manufacturers, and in some applications, directive 2014/30/EU might also be relevant as it regulates the electromagnetic compatibility of equipment. Machinery directive 2006/42/EC describes the requirements for the design and construction of safe machines introduced into the European market. Manufacturers can only affix a CE label and deliver the machine to their customers if they can prove in the declaration of conformity that they have fulfilled the directive’s requirements.

Although the other safety standards are not mandatory, manufacturers should still follow them as they help to fulfill the requirements in machinery directive 2006/42/EC. For example, manufacturers can follow the guidance in ISO 12100 to reduce identified risks to an acceptable residual risk. They can use ISO 13849 or IEC 62061 to find the required safety level for each risk and ensure that the corresponding safety-related function meets the defined requirements. Mobile robot manufacturers decide how they achieve a certain safety level. For example, they can decrease the speed of the mobile robot to lower the risk of collisions and severity of injuries to an acceptable level. Or they can ensure that mobile robots only operate in separated zones where human access is prohibited (defined as confined zones in ISO 3691-4).
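To make the idea of a required safety level concrete, the sketch below encodes the risk graph commonly reproduced from the informative annex of ISO 13849-1, which maps severity (S), frequency of exposure (F), and possibility of avoidance (P) to a required performance level (PLr) from a to e. Treat the table as an illustration and check it against the current edition of the standard before relying on it; the function name is made up for this example.

```python
# Risk graph: (severity, frequency/exposure, possibility of avoidance) -> PLr.
# S1 = slight injury, S2 = serious injury; F1 = seldom, F2 = frequent;
# P1 = avoidance possible under specific conditions, P2 = scarcely possible.
RISK_GRAPH = {
    ("S1", "F1", "P1"): "a",
    ("S1", "F1", "P2"): "b",
    ("S1", "F2", "P1"): "b",
    ("S1", "F2", "P2"): "c",
    ("S2", "F1", "P1"): "c",
    ("S2", "F1", "P2"): "d",
    ("S2", "F2", "P1"): "d",
    ("S2", "F2", "P2"): "e",
}


def required_performance_level(severity: str, frequency: str, avoidance: str) -> str:
    """Look up the required performance level for one identified risk."""
    try:
        return RISK_GRAPH[(severity, frequency, avoidance)]
    except KeyError:
        raise ValueError("expected S1/S2, F1/F2 and P1/P2") from None


# Hypothetical assessment of a collision risk in a shared aisle.
at_full_speed = required_performance_level("S2", "F2", "P2")
# Assumption for illustration: at reduced speed a person can step aside (P1).
at_reduced_speed = required_performance_level("S2", "F2", "P1")
print(at_full_speed, at_reduced_speed)
```

This mirrors the speed example above: changing how the robot operates can change the inputs to the assessment, and with them the level the safety function has to reach.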

Identifying the correct standards and implementing the requirements is the best way mobile robot manufacturers can create a safe system. But as this summary suggests, it’s a complicated and time-consuming process.

4. Ensuring a Reliable CAN Communication

A reliable and easy-to-implement standard since the 1980s, communication based on CAN technology is still growing in popularity, mainly due to its use in various booming industries, such as E-Mobility and Battery Energy Storage Systems (BESS). CAN is simple, energy-efficient, and cost-efficient. All the devices on the network can access all the information, and it’s an open standard, meaning that users can adapt and extend the messages to meet their needs.

For mobile robot manufacturers, establishing a CAN connection is becoming even more vital as it enables them to monitor the lithium-ion batteries increasingly used in mobile robot drive systems, either in retrofit systems or in new installations. Mobile robot manufacturers need to do the following:

1. Establish a reliable connection to the CAN or CANopen communication standards to enable them to check their devices, such as monitoring the battery’s status and performance.

2. Protect systems from electromagnetic interference (EMI), as EMI can destroy a system’s electronics. The risk of EMI is significant in retrofits as adding new components, such as batteries next to the communication cable, results in the introduction of high-frequency electromagnetic disturbances.
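Monitoring a battery over CAN comes down to decoding the data bytes of known frames. The sketch below shows the idea with Python's standard `struct` module. The CAN identifier, the byte layout, and the scaling factors are all hypothetical; a real battery management system documents its own message layout, and reading frames off the bus would need a CAN interface library that is not shown here.

```python
import struct
from dataclasses import dataclass

# Hypothetical frame definition, invented for this example:
#   bytes 0-1  pack voltage, unsigned, 0.01 V per bit
#   bytes 2-3  pack current, signed, 0.1 A per bit (negative = discharging)
#   byte  4    state of charge in percent
#   byte  5    temperature in degrees Celsius, signed
#   bytes 6-7  reserved
BATTERY_STATUS_ID = 0x181
_LAYOUT = struct.Struct("<HhBb2x")  # little-endian, 8 bytes in total


@dataclass
class BatteryStatus:
    voltage_v: float
    current_a: float
    state_of_charge_pct: int
    temperature_c: int


def decode_battery_status(can_id: int, data: bytes) -> BatteryStatus:
    """Decode one battery status frame into engineering units."""
    if can_id != BATTERY_STATUS_ID:
        raise ValueError(f"unexpected CAN id 0x{can_id:X}")
    if len(data) != _LAYOUT.size:
        raise ValueError(f"expected {_LAYOUT.size} data bytes, got {len(data)}")
    raw_voltage, raw_current, soc, temperature = _LAYOUT.unpack(data)
    return BatteryStatus(raw_voltage / 100, raw_current / 10, soc, temperature)


# Example payload as it might arrive from the bus.
payload = _LAYOUT.pack(4810, -125, 87, 31)
status = decode_battery_status(BATTERY_STATUS_ID, payload)
print(status)
```

Validating the identifier and the length before unpacking matters in practice, because a frame corrupted by interference should be rejected rather than turned into a plausible-looking reading.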

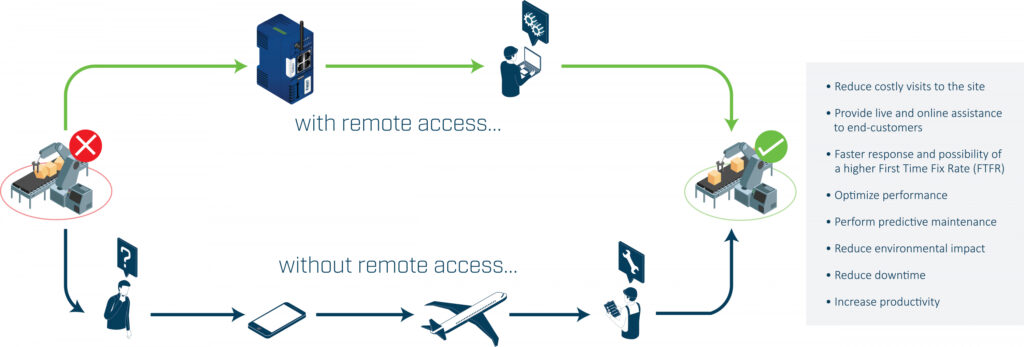

5. Accessing Mobile Robots Remotely

The ability to remotely access a machine’s control system can enable mobile robot vendors or engineers to troubleshoot and resolve most problems without traveling to the site.

Benefits of Remote Access

The challenge is to create a remote access solution that balances the needs of the IT department with the needs of the engineer or vendor.

The IT department wants to ensure that the network remains secure, reliable, and retains integrity. As a result, the remote access solution should include the following security measures:

- Use outbound connections rather than inbound connections to keep the impact on the firewall to a minimum.

- Separate the relevant traffic from the rest of the network.

- Encrypt and protect all traffic to ensure its confidentiality and integrity.

- Ensure that vendors work in line with or are certified to relevant security standards such as ISO 27001.

- Ensure that suppliers complete regular security audits.

The engineer or vendor wants an easy-to-use and dependable system. It should be easy for users to connect to the mobile robots and access the required information. If the installation might change, it should be easy to scale the number of robots as required. If the mobile robots are in a different country from the vendors or engineers, the networking infrastructure must have sufficient coverage and redundancy to guarantee availability worldwide.

Conclusion

As we’ve seen, mobile robot manufacturers must solve many communication and safety challenges. They must establish a wireless connection, send data over different networks, ensure safety, connect to CAN systems, and securely access the robots remotely. And to make it more complicated, each installation must be re-assessed and adapted to meet the on-site requirements.

Best practice to implement mobile robot communication

Mobile robot manufacturers are rarely communication or safety experts. Consequently, they can find it time-consuming and expensive to try to develop the required communication technology in-house. Enlisting purpose-built third-party communication solutions not only solves the communication challenges at hand, it also provides other benefits.

Modern communication solutions have a modular design enabling mobile robot manufacturers to remove one networking product designed for one standard or protocol and replace it with a product designed for a different standard or protocol without impacting any other part of the machine. For example, Bluetooth may be the most suitable wireless standard in one installation, while Wi-Fi may provide better coverage in another installation. Similarly, one site may use the PROFINET and PROFIsafe protocols, while another may use different industrial and safety protocols. In both scenarios, mobile robot manufacturers can use communication products to change the networking technology to meet the local requirements without making any changes to the hardware design.

Authors:

Mark Crossley, Daniel Heinzler, Fredrik Brynolf, Thomas Carlsson

HMS Networks

HMS Networks is an industrial communication expert based in Sweden, providing several solutions for AGV communication. Read more on www.hms-networks.com/agv

AGILOX introduces new ODM robot

AGILOX expands its product portfolio with an intelligent dolly mover

After AGILOX ONE and AGILOX OCF, now comes AGILOX ODM. The company, which specializes in logistics robots, is adding an autonomous dolly mover to its range of intelligent transport systems controlled by swarm intelligence. AGILOX is thus targeting a completely new area of application: the transport of small load carriers.

AGILOX is expanding its range of Autonomous Mobile Robots (AMRs) with the new Omnidirectional Dolly Mover AGILOX ODM. While the AGILOX ONE is equipped with a scissor lift and the AGILOX OCF has a free lift for load handling, the AGILOX ODM is built on the principle of a dolly mover. This means it can accept loads with a maximum weight of 300 kg to a maximum lifting height of 250 mm and transport them to their destination. The intelligent AMR concept with AGILOX X-SWARM technology thus opens up new areas of application and other industry segments because small load carriers (such as totes), which the new AGILOX ODM is designed to transport, are widely used, especially in the electronics and pharmaceutical industries.

Details of the new AGILOX ODM

With the AGILOX ODM, AGILOX has brought new thinking to the concept of Automated Guided Vehicles: The compact vehicle travels autonomously and navigates freely on the production floor or in the warehouse, perfectly ensuring the in-house material flow. Just like AGILOX ONE and AGILOX OCF, AGILOX ODM uses an omnidirectional drive concept. This allows it to travel transversely into rack aisles as well as turn on the spot, enabling it to maneuver even in the tightest of spaces. The lithium iron phosphate (LiFePO4) accumulator ensures short charge times and long operating cycles.

“AGILOX is a brand that has built a strong foundation with the AGILOX ONE and the AGILOX OCF. With the new AGILOX ODM, we remain true to our brand DNA while simultaneously targeting the transport of small load carriers to support our growth plan to become the world’s leading AMR provider,” says Georg Kirchmayr, CEO of AGILOX Services GmbH.

The AGILOX advantage

With the new AGILOX ODM, customers can benefit from all the advantages of the same proven X-SWARM technology as in AGILOX ONE and AGILOX OCF: the unique advantages of an (intra-)logistics solution designed from the ground up.

Since AGILOX AMRs have no need for a central control system and can orient to the existing contours with millimeter precision, this eliminates time-consuming and costly modifications to the existing infrastructure. Autonomous route-finding also enables the vehicles to avoid obstacles unaided. If it is not possible to get past the detected obstacle due to its size or the available clearance, the AMRs calculate a new route within seconds to reach their destination as quickly as possible. For customers, this means maximum freedom in their existing processes, because they do not have to adapt to the Autonomous Mobile Robot system. Instead, the system adapts to the customer’s processes. Furthermore, fully autonomous routing ensures a safe workflow – even in mixed operations.

Plug & Perform commissioning of the intelligent intralogistics solutions and the absence of a master computer or navigation aids also save AGILOX customers from doing tedious alteration work in advance. Once the logistics robots have been put into operation, they organize themselves according to the (decentralized) principle of swarm intelligence, i.e. they exchange information several times a second to enable the entire fleet to calculate the most efficient route and prevent potential deadlocks before these can occur. The customer thus benefits from a system that constantly runs smoothly, with no downtime. Time-consuming coordination of vehicles by the customer is also a thing of the past thanks to AGILOX X-SWARM technology. For the customer, this means flexibility, because it lets them expand the vehicles’ area of operation within just a few minutes. It also means that it is very easy to relocate an AGILOX AMR to be used temporarily in other areas of the company or its subsidiaries. Since AGILOX vehicles can also communicate with other machines or the building infrastructure by means of IO boxes, even rolling doors or multiple floors are no problem for the AMRs. So, this too means that customers enjoy maximum flexibility in the organization of their production processes.

Another major advantage comes from mixed operation of the AGILOX fleet in a “swarm”. The smaller AGILOX ONE and ODM series vehicles can then, for example, feed the assembly workstations or e-Kanban racks, while the AGILOX OCF vehicles transport the pallets. This can easily be done because AGILOX AMRs all use the same control and WiFi infrastructure.

Kivnon brings perfect Pallet Stacking to Logistics & Automation 2022

Kivnon will be presenting its most advanced and safest AGV/AMR Forklift at the event

21 September 2022, Barcelona: Kivnon, an international group specializing in automation and mobile robotics, is attending Logistics & Automation in Spain and will be showcasing its safe and versatile K55 AGV/AMR Forklift Pallet Stacker. Putting the emphasis on forklift safety, Kivnon K55 is equipped with advanced safety features to guarantee safe operations as it collaborates, moves, and reacts in a facility.

The Kivnon K55 is designed to move and stack palletized loads at low heights and performs cyclic or conditioned routes while interacting with other AGVs/AMRs, machines, systems, and people, making it a highly effective and safe solution. The model incorporates safety scanners that allow the vehicle to ensure 360-degree safety and operate seamlessly in shared spaces. The fork sensors help assess the possibility of correct loading or unloading of the pallet, keeping the transported goods safe.

Thierry Delmas, Managing Director at Kivnon, says, “AGVs/AMRs are revolutionizing internal logistics. The rising forklift safety challenge is of deep concern, and with the K55 we have taken a step forward to address the global issue. The Kivnon range is designed to ensure safe and reliable operations and to optimize operational efficiency.”

During the event, which runs from 26 – 27 October at IFEMA, Madrid, Kivnon will demonstrate the capabilities of the K55 Pallet Stacker. The vehicle can autonomously transport palletized loads of up to 1,000 kg and lift them to heights of up to 1 meter. The vehicle is capable of performing cyclical or conditional circuits and interacting with other AGVs/AMRs, machines, and systems. Highly adaptable, the K55 is perfect for any open-bottom or euro-pallet storage application, receipt and dispatch of goods, and internal material transport. Its use will optimize safety, storage space, and process efficiency.

A robust industrial product, the K55 provides the reliability required to ensure continuity of the production process and the flexibility to adapt to specific application needs, with an online battery charging system that can function 24/7 with opportunity charges.

Delmas continues, “The Logistics and Automation show is an important networking event where customers can learn about the latest technologies and innovations. We pride ourselves on innovation and are excited to have this opportunity to showcase the capabilities of our products. In addition to the K55, our robust portfolio also includes twister units, car and heavy load tractors, low-height vehicles, and cart pullers, meeting multiple application needs.”

The efficiency and precision of Kivnon AGVs/AMRs will be on display and Kivnon robotics experts will be available throughout the show to answer questions and arrange consultations at booth #3F43.

To register for the show, please visit https://www.logisticsautomationmadrid.com/en/

About Kivnon:

Kivnon offers a wide range of autonomous vehicles (AGVs/AMRs) and accessories for transporting goods, using magnetic navigation or mapping technologies that adapt to any environment and industry. The company offers an integrated solution with a wide range of mobile robotics solutions automating different applications within the automotive, food and beverage, logistics and warehousing, manufacturing, and aeronautics industries.

Kivnon products are characterized by their robustness, safety, precision, and high quality. A user-friendly design philosophy creates a pleasant, simple to install, and intuitive work experience.

Learn more about Kivnon mobile robots (AGVs/AMRs) here.

Austin-based Apptronik Inks Partnership with NASA for Humanoid Robots

AUSTIN, TEXAS (PRWEB) SEPTEMBER 20, 2022

Apptronik, an Austin-based company specializing in the development of versatile, mobile robotic systems, is announcing a partnership with NASA to accelerate commercialization of its new humanoid robot. The robot, called Apollo, will be one of the first humanoids available to the commercial markets.

At Apptronik’s headquarters in Austin, Texas, the first prototype of Apollo is now complete, with the expectation of broader commercial availability in 2023. Unlike special-purpose robots that are only capable of a single, repetitive task, Apollo is designed as a general-purpose robot capable of doing a wide range of tasks in dynamic environments. Apollo will benefit workers in industries ranging from logistics, retail, hospitality, aerospace and beyond.

NASA is known across the globe for its contributions to the advancement of robotics technology. NASA first partnered with Apptronik in 2013 during the DARPA Robotics Challenge (DRC), where founders were selected to work on NASA’s Valkyrie Robot. The government agency has now selected Apptronik as a commercial partner to launch a new generation of general-purpose robots, starting with Apollo.

“Continued investment from NASA validates the work we are doing at Apptronik and the inflection point we have reached in robotics. The robots we’ve all dreamed about are now here and ready to get out into the world,” said Jeff Cardenas, CEO and co-founder of Apptronik. “These robots will first become tools for us here on Earth, and will ultimately help us move beyond and explore the stars.”

In addition to its work with NASA, Apptronik’s team has partnered with leading automotive OEMs, major transportation and logistics companies, and government agencies. Boasting notable names including Dr. Nicholas Paine, Co-founder and Chief Technology Officer of Apptronik and Dr. Luis Sentis, Co-Founder and Scientific Advisor, its team is respected as among the best in the world. A growing hub for robotics, the Austin-based company continues to recruit top talent looking to bring their innovations to market now.

Apptronik is recognized for its emphasis on human-centered design, building beautifully designed and user-friendly robotic systems. As part of this commitment, it selected premier design firm argodesign as its partner in designing Apollo with the goal of creating robots capable of working alongside humans in our most critical industries. The team’s focus now is to scale Apollo so that it can be customer-ready in 2023.

About Apptronik:

Apptronik is a robotics company that has built a platform to deliver a variety of general-purpose robots. The company was founded in 2016 out of the Human Centered Robotics Lab at the University of Texas at Austin, with a mission to leverage innovative technology for the betterment of society. Its goal is to introduce the next generation of robots that will change the way people live and work, while tackling some of our world’s largest challenges. To learn more about careers at Apptronik, visit https://apptronik.com/careers/.

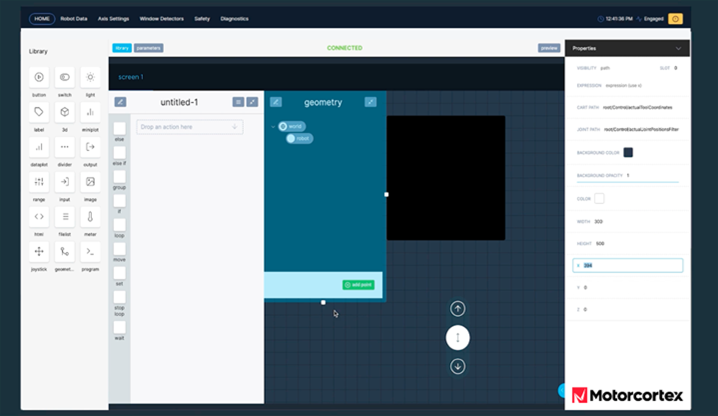

Robot control programmed quickly and easily in the cloud:

Synapticon makes MOTORCORTEX available as an online version

Böblingen, 4 August 2022: In mechanical engineering and robotics, too, software has become the decisive success factor in recent years. Both the way robot controllers are developed and their performance in practice are of great importance to manufacturers of industrial robots. Against this background, Synapticon has now introduced MOTORCORTEX.io, a groundbreaking solution offered as SaaS (Software as a Service). It makes it possible to conveniently develop very powerful, 100% customized robot controllers in the cloud, deploy them to controllers in the field, and test them using a digital twin. In series production of the robot or automation product, the customized control software can then be deployed at scale and also run offline. In addition to industrial PCs, embedded modules down to a Raspberry Pi can be used for this.

“Automation has picked up considerable speed again in recent years. Key elements include automated guided vehicle systems (AGV/AMR) as well as cobots and lightweight robots. These systems place new demands not only on the hardware but also on the software, especially when it comes to topics such as navigation, safety, and the learning of sequences,” explains Nikolai Ensslen, CEO and founder of Synapticon. “The big challenge for many manufacturers now is that they must offer their customers solutions that are attractively priced and always state of the art. Companies should therefore be able to develop custom control software for their systems quickly and cost-efficiently. For this, we now offer MOTORCORTEX, a solution that is unique on the market and that drastically shortens the development time of robot controllers.”

Create real-time control applications in the cloud, deploy them to offline controllers, and maintain them remotely

MOTORCORTEX comprises a whole package of apps, templates, and tools for designing, controlling, analyzing, and deploying industrial automation applications. This includes, for example, a widget for simple graphical (“no code”) programming of robots, which is becoming standard in the cobot field. The platform for custom robot and machine controllers is implemented with state-of-the-art software technology, meets all the requirements of the automation of the future, and is at the same time highly powerful and scalable.

The integrated Linux-based, resource-optimized operating system provides real-time control of industrial hardware over EtherCAT, such as drive axes based on Synapticon SOMANET, and a very powerful communication layer for higher-level applications such as a user interface or data analysis tools. MOTORCORTEX enables high-speed streaming communication directly to the web browser without an intermediate server, which marks a turning point in the industry. It is now possible to build responsive web applications for extremely smooth interaction with machines. The solution offers open APIs for all major programming languages, such as JavaScript, Python, and C++. This open architecture offers far more freedom than current industrial control systems and enables true Industry 4.0 applications with only a few lines of code. Communication with surrounding or higher-level control units is supported via OPC UA.

“Using MOTORCORTEX is as easy as setting up a simple website. With a little configuration work and a few lines of code, developers can connect to their machine directly and securely from any web browser and exchange data quickly. No other industrial control system is as easy and flexible to set up for demanding, modern control tasks,” explains Nikolai Ensslen. “Applications based on MOTORCORTEX automatically share all their data in the underlying real-time database, so that external applications or services can access the data easily and securely.”

Development accelerated, costs reduced

Initial customer projects show that, with MOTORCORTEX in the cloud, developers manage to shorten the software development process by up to 90%. At the same time, software development costs fall significantly, as MOTORCORTEX relies on a simple licensing model with no additional costs for maintenance and further development. Because MOTORCORTEX is also completely hardware-independent, developers are free to choose which hardware components they use to develop their control software. The combination of the MOTORCORTEX software with the SOMANET servo drives from Synapticon’s motion control portfolio promises ideal results and maximum efficiency.

MOTORCORTEX does not aim to pre-empt or replace robot manufacturers’ own software development or innovative third-party control solutions, for example for easy teaching of robots or for integrating image processing and AI. Rather, the platform is intended to serve as a solid foundation for these and to relieve developers of the groundwork.

“MOTORCORTEX, like the SOMANET electronics, sees itself as infrastructure hidden within the end product. It is meant to provide a powerful, reliable foundation for the most modern and innovative robot controllers. We see ourselves as the technology and infrastructure partner of the best innovators in robotics and automation,” summarizes Nikolai Ensslen. “I am certain that, as in many other industries, software will in future become an essential and critical differentiator in robotics as well. With MOTORCORTEX, we give companies the best platform for this, so that they can concentrate on the innovations relevant to their customers and on differentiating themselves from their competitors.”

More information at www.synapticon.com

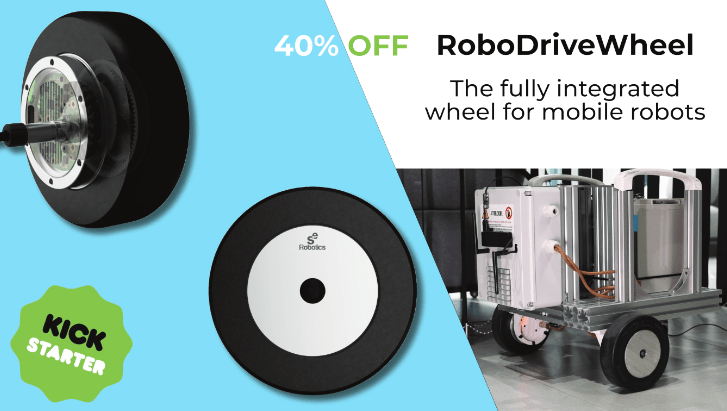

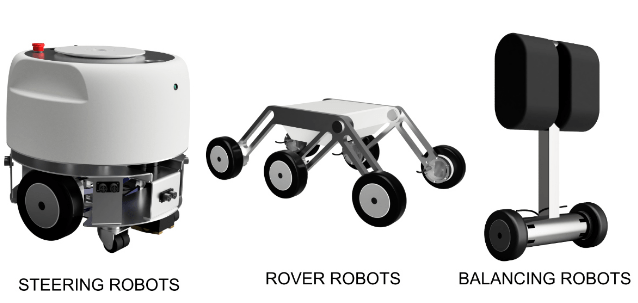

Kickstarter Campaign Announced for RoboDriveWheel: The fully integrated wheel for mobile robots

NAPLES, ITALY — S4E Robotics is pleased to announce the launch of a Kickstarter campaign that will allow the public to meet RoboDriveWheel: the fully integrated wheel for mobile robots. RoboDriveWheel is a new fully integrated motorized wheel designed specifically for the development of a new generation of safe and versatile service mobile robots.

“We believe RoboDriveWheel can help all robotics designers, as well as enthusiasts and hobbyists, create powerful and smart mobile robots with little effort while reducing cost and time,” says Andrea Fontanelli, inventor of RoboDriveWheel. “This Kickstarter campaign, which aims to raise 43359€, will help us optimize the production process, manufacture our units and finalize our packaging components.”

RoboDriveWheel integrates, inside a Continental rubber with strong adhesion, a powerful brushless motor, a high-efficiency planetary gearbox and a control board implementing state-of-the-art algorithms for torque and velocity control. RoboDriveWheel can also detect impacts and collisions thanks to the combined use of torque, acceleration and inclination measurements obtained from the sensors integrated into the control board.
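The announcement does not describe how the torque, acceleration and inclination signals are combined, so the following is only a minimal sketch of one plausible approach: a simple two-out-of-three threshold vote. The `WheelSample` type, the field names and all threshold values are hypothetical and are not taken from S4E Robotics.

```python
from dataclasses import dataclass


@dataclass
class WheelSample:
    """One reading from a hypothetical wheel control board."""
    measured_torque: float   # N*m, torque estimated at the motor
    expected_torque: float   # N*m, torque the controller expected to need
    acceleration: float      # m/s^2, linear acceleration magnitude
    inclination_deg: float   # degrees, tilt relative to level ground


# Illustrative thresholds only; real values would need tuning on hardware.
TORQUE_RESIDUAL_LIMIT = 0.8   # N*m
ACCELERATION_LIMIT = 6.0      # m/s^2
INCLINATION_LIMIT = 15.0      # degrees


def detect_collision(sample: WheelSample) -> bool:
    """Flag a likely impact when at least two of the three indicators trip."""
    torque_spike = abs(sample.measured_torque - sample.expected_torque) > TORQUE_RESIDUAL_LIMIT
    accel_spike = abs(sample.acceleration) > ACCELERATION_LIMIT
    tilt_spike = abs(sample.inclination_deg) > INCLINATION_LIMIT
    return sum([torque_spike, accel_spike, tilt_spike]) >= 2
```

Requiring two indicators to agree is a design choice made here to reduce false alarms from a single noisy sensor; a production controller would more likely use filtered signals or a model-based observer.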

“RoboDriveWheel is the ideal solution for anyone who wants to build a mobile robot with little effort but which integrates the most modern technologies,” says Roberto Iorio, CFO of S4E Robotics. RoboDriveWheel is being produced by S4E Robotics, a company specialising in industrial automation and robotics. It has designed and produced mobile robots such as ENDRIU, a compact and modular mobile robot for sanitization. Designing mobile service robots is highly time-consuming. A drive wheel for a service robot must be compact, provide good traction, and be powerful yet fast enough to move the robot at a speed comparable to that of a human.

Moreover, a robotic wheel must include all the necessary functionalities, sensors, and electronics. Finally, a service robot must work close to humans, so it should be able to detect its interactions with the environment efficiently. Usually, all these capabilities require different components: the wheel, the shaft, one or more bearings with their housing, a traction system (pulley and belt), a motor with a reducer and encoder, an IMU, and the electronics with several cables for all the sensors.

RoboDriveWheel integrates all these functions inside the wheel, and it is easy to connect and control through a single cable for power supply and CAN bus communication, a protocol used for years in the automotive sector.
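To illustrate what commanding a wheel over CAN bus can look like, here is a minimal sketch that packs a velocity command into the data field of a classic CAN frame, which carries at most 8 bytes. The identifier `VELOCITY_CMD_ID`, the scaling and the byte layout are assumptions made for this example; the actual RoboDriveWheel message format is not given in the announcement.

```python
import struct
from typing import Tuple

# Hypothetical values, not the RoboDriveWheel protocol.
VELOCITY_CMD_ID = 0x101   # assumed 11-bit standard CAN identifier
SCALE = 100               # assumed fixed-point scale: 0.01 units per count
MAX_CLASSIC_CAN_PAYLOAD = 8


def encode_velocity_command(velocity_rad_s: float, torque_limit_nm: float) -> bytes:
    """Pack a signed velocity and an unsigned torque limit, little-endian."""
    velocity_raw = round(velocity_rad_s * SCALE)    # int16
    torque_raw = round(torque_limit_nm * SCALE)     # uint16
    payload = struct.pack("<hH", velocity_raw, torque_raw)
    assert len(payload) <= MAX_CLASSIC_CAN_PAYLOAD
    return payload


def decode_velocity_command(payload: bytes) -> Tuple[float, float]:
    """Inverse of encode_velocity_command."""
    velocity_raw, torque_raw = struct.unpack("<hH", payload)
    return velocity_raw / SCALE, torque_raw / SCALE


payload = encode_velocity_command(2.5, 1.2)
```

The resulting 4-byte payload would then be sent with the chosen identifier through whatever CAN interface the robot's controller provides.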

To learn more about Kickstarter and supporting RoboDriveWheel’s 43359€ campaign, please visit

https://www.kickstarter.com/projects/andreafontanelli/robodrivewheel

See RoboDriveWheel in action in our promotional video on YouTube: https://www.youtube.com/watch?v=t4SOgimqY6U

Everyday helpers of the future: igus accelerates humanoid robotics with low-cost automation

At Hannover Messe 2022, igus presents the first prototype of a humanoid low-cost robot

Cologne, 27 May 2022 – Human, machine, or both? Humanoid robots have long since ceased to be mere science fiction and are now reality. igus has also been researching humanoid robotics for some time and is now presenting a prototype of the motion plastics bot at Hannover Messe: a humanoid robot that combines the advantages of high-performance plastics and low-cost automation.

Robots have become an integral part of our everyday lives. Since the shift to Industry 4.0 at the latest, more and more tasks have been automated, and new forms of robotics are benefiting as well. But robots can make things easier not only in industry but also in everyday life. A humanoid that is not only functional but also friendly, with human features, can accompany people as a partner rather than as a machine. Research and development in humanoid robotics is making steady progress. One example is a research team at TU Chemnitz that is developing an e-skin: a touch-sensitive electronic skin that could make humanoid robots even more human-like. Always driven by the question of which direction robotics can develop in, igus has also been working for some time on its own vision of a humanoid robot, the motion plastics bot. “With the igus ReBeL and our drytech range, suitable components were already available to bring motion into a robot. The humanoid robot is a joint project with the robotics experts at the Stuttgart start-up TruPhysics, which assembled the intelligent humanoid from our motion plastics and other components. It is available there under the name Robert M3,” explains Alexander Mühlens, Head of the Automation Technology and Robotics Business Unit at igus. “With the bot, we want to demonstrate the interplay of our high-performance plastic products and integrated intelligence, and do so at an affordable price.”

Lightweight, maintenance-free low-cost humanoid

For a long, trouble-free service life without maintenance, the igus tribo-polymers give the motion plastics bot a clear advantage: they need no lubricants. At the same time, the high-performance plastics allow a lightweight design. Thanks to them, the motion plastics bot weighs only 78 kilograms at a height of up to 2.70 metres. Its arm span is 1.50 metres. The motion plastics bot has a self-driving AGV (automated guided vehicle), a telescopic body, and a head with an integrated screen and avatar for interactive communication. Another central component is the igus ReBeL, a service robot with cobot capabilities, which serves as the bot's arms. At the heart of the ReBeL are the fully integrated plastic tribo strain wave gears with motor, absolute encoder, force control, and controller. The motion plastics bot moves at walking pace and has a payload of 2 kilograms per arm. It is controlled as an open-source solution via the Robot Operating System (ROS), because the entire igus low-cost automation range can be represented in ROS. With the motion plastics bot study, igus combines the advantages of its high-performance plastics for motion with its know-how in low-cost automation to further advance the development of the next generation of robots.

A lifelong companion rather than just a machine

“We see a lot of potential in the use of humanoid robots. But our world is built by humans for humans. Instead of using only individual automation components, it therefore makes sense to research humanoids and androids. The question is: when will the market be ready?” says Alexander Mühlens. Human-like robots can take on dangerous tasks as well as simple and monotonous ones. In the workplace, they can do jobs that go beyond the mere pick and place that robot arms perform. In the household, one bot can replace several robots: it could vacuum, mow the lawn, do the shopping, cook, do the laundry, and take on all kinds of other tasks independently, even caring for sick people. It would thus be not just a machine but a companion that could make life easier for an entire human lifetime. “Using such a robot still involves high costs, but if you take its possible service life into account, the investment would pay off in the longer term,” says Mühlens. “Our goal is to demonstrate cost-effective and simple solutions for humanoid robotics using motion plastics components.”

Teledyne FLIR Introduces Hadron 640R Dual Thermal-Visible Camera for Unmanned Systems

GOLETA, Calif. and ORLANDO, Fla. ― Teledyne FLIR, part of Teledyne Technologies Incorporated, today announced the release of its high-performance Hadron 640R combined radiometric thermal and visible dual camera module. The Hadron 640R design is optimized for integration into unmanned aircraft systems (UAS), unmanned ground vehicles (UGV), robotic platforms, and emerging AI-ready applications where battery life and run time are mission critical.

The 640 x 512 resolution Boson longwave infrared (LWIR) thermal camera inside the Hadron 640R can see through total darkness, smoke, most fog, and glare, and provides temperature measurements for every pixel in the scene. The addition of the high-definition 64 MP visible camera enables the Hadron 640R to provide both thermal and visible imagery compatible with today’s on-device processors for AI and machine-learning applications at the edge.

“The Hadron 640R provides integrators the opportunity to deploy a high-performance dual-camera module into a variety of unmanned form factors from UAS to UGV thanks to its incredibly small size, weight, and power requirement,” said Michael Walters, vice president product management, Teledyne FLIR. “It is designed to maximize efficiency and its IP-54 rating protects the module from intrusion of dust and water from the outside environment.”

The Hadron 640R reduces development costs and time-to-market for integrators and original equipment manufacturer (OEM) product developers by offering a complete system through a single supplier, Teledyne FLIR. This includes drivers for market-leading processors from NVIDIA, Qualcomm, and more, plus integration support and service from a team of experts. It also offers flexible 60 Hz video output via USB or MIPI. Hadron 640R is a dual-use product and is classified under US Department of Commerce jurisdiction.
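For integrators sizing a USB or MIPI link, a rough back-of-the-envelope calculation of the raw thermal data rate may help. The sketch below uses the 640 x 512 resolution and 60 Hz figure from the release, and assumes that the 60 Hz rate applies to the thermal stream and that each radiometric pixel is stored as 16 bits. Neither assumption is confirmed by the release.

```python
THERMAL_WIDTH = 640      # pixels, from the release
THERMAL_HEIGHT = 512     # pixels, from the release
FRAME_RATE_HZ = 60       # from the release; assumed to apply to thermal video
BYTES_PER_PIXEL = 2      # assumption: 16-bit radiometric samples


def pixels_per_frame(width: int = THERMAL_WIDTH, height: int = THERMAL_HEIGHT) -> int:
    """Number of per-pixel temperature measurements in one frame."""
    return width * height


def raw_bytes_per_second(
    width: int = THERMAL_WIDTH,
    height: int = THERMAL_HEIGHT,
    fps: int = FRAME_RATE_HZ,
    bytes_per_pixel: int = BYTES_PER_PIXEL,
) -> int:
    """Uncompressed thermal data rate in bytes per second."""
    return pixels_per_frame(width, height) * bytes_per_pixel * fps


print(pixels_per_frame())                 # 327680 measurements per frame
print(raw_bytes_per_second() / 1e6)       # 39.3216 MB/s under the assumptions above
```

Under these assumptions the thermal stream alone needs roughly 39 MB/s before any compression, and the visible camera adds to that.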

The Teledyne FLIR Hadron 640R is available for purchase globally from Teledyne FLIR and its authorized dealers. To learn more or to purchase, visit www.flir.com/hadron640r.

For an exclusive in-person first look at the Hadron 640R, please visit booth #2107 at AUVSI Xponential, April 26-28, 2022, in Orlando, Florida.

About Teledyne FLIR

Teledyne FLIR, a Teledyne Technologies company, is a world leader in intelligent sensing solutions for defense and industrial applications with approximately 4,000 employees worldwide. Founded in 1978, the company creates advanced technologies to help professionals make better, faster decisions that save lives and livelihoods. For more information, please visit www.teledyneflir.com or follow @flir.

About Teledyne Technologies

Teledyne Technologies is a leading provider of sophisticated digital imaging products and software, instrumentation, aerospace and defense electronics, and engineered systems. Teledyne’s operations are primarily located in the United States, the United Kingdom, Canada, and Western and Northern Europe. For more information, visit Teledyne’s website at www.teledyne.com.