Creality has lifted the veil on its latest 3D scanner. In an effort to further diversify its 3D cosmos, Creality, the well-known manufacturer of community-favorite 3D printers such as the Ender 3, has announced its new and improved 3D scanner: the CR-Scan Lizard.

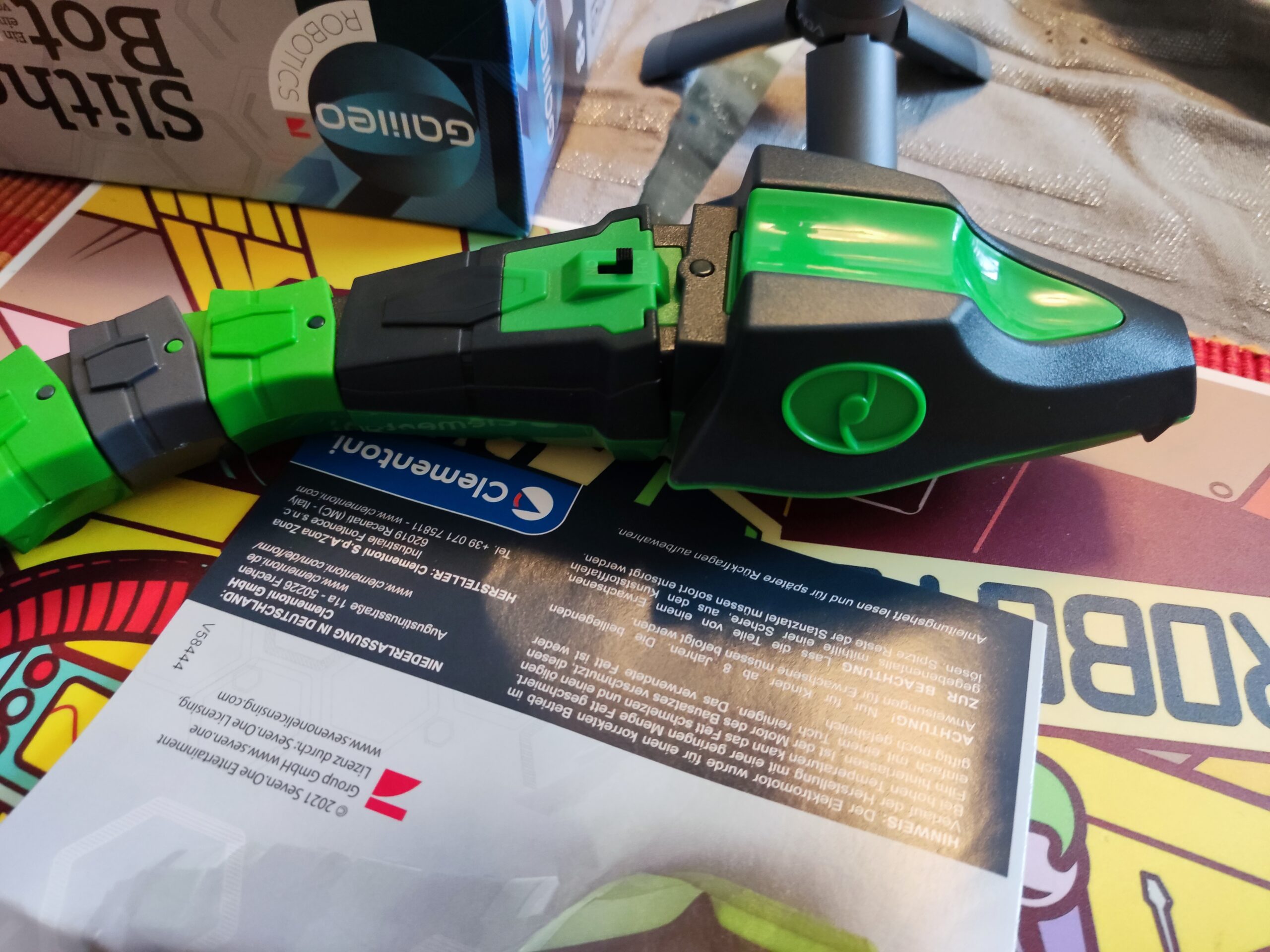

This entry-level 3D scanner for consumers follows the company’s CR-Scan 01, which was released a fairly short time ago as an affordable option for users to digitize objects. The new Lizard is smaller for better portability and feel, and promises improved features such as accuracy of up to 0.05 mm and better handling of bright environments and dark objects. All that for less money than its predecessor, even.

With the Lizard, you can scan small or large objects with ease. The CR Studio software does the heavy lifting of optimizing models and even sends those files via the Creality Cloud directly to your 3D printer. The applications seem almost endless.

With some early bird specials, the CR-Scan Lizard made its debut on Kickstarter in February 2022 and, unsurprisingly, smashed its campaign goal in next to no time.

We have gathered all the information revealed so far about this new consumer-grade 3D scanner to give you an overview of what the Lizard has in store. Creality has also already sent us a scanner to try for ourselves, so keep an eye out for our upcoming hands-on experience.

Features

HIGH ACCURACY

With the CR-Scan Lizard, Creality wants to bring professional-grade accuracy to the budget market. According to its spec sheet, the scanner has an accuracy of up to 0.05 mm, allowing it to capture small parts and intricate details with high precision. Thanks to the scanner’s binocular design and improved precision calibration, Creality says it can pick up rich detail from objects as small as 15 x 15 x 15 mm or as large as car doors, engines, rear bumpers, and so on.

SCAN MODES

The CR-Scan Lizard comes with three different scanning modes. You can either use it in turntable mode, handheld mode, or a mixture of the two to scan an object.

Turntable mode is suitable for 15 – 300 mm objects and will scan automatically. You can use the combination mode for larger objects up to 500 mm, where you put the object on the rotary table but hold the scanner in hand to scan. Lastly, its handheld mode is suitable for scanning large objects up to 2 meters in size, such as the car parts mentioned above.
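As a rough illustration of the size ranges above, here is a minimal Python sketch that suggests a scan mode from an object's largest dimension. The function name `suggest_scan_mode` and the hard cut-offs are assumptions made for this example; they are not part of CR Studio or any Creality software.

```python
def suggest_scan_mode(largest_dimension_mm: float) -> str:
    """Suggest a scan mode from the size ranges quoted in the article.

    Hypothetical helper: the thresholds simply mirror the stated ranges
    (15-300 mm turntable, up to 500 mm combination, up to 2 m handheld).
    """
    if largest_dimension_mm < 15:
        return "too small"      # below the smallest stated object size
    if largest_dimension_mm <= 300:
        return "turntable"      # scanned automatically on the rotary table
    if largest_dimension_mm <= 500:
        return "combination"    # object on the table, scanner held in hand
    if largest_dimension_mm <= 2000:
        return "handheld"       # large objects such as car parts
    return "too large"          # beyond the stated 2 m limit


print(suggest_scan_mode(120))   # turntable
print(suggest_scan_mode(450))   # combination
print(suggest_scan_mode(1500))  # handheld
```

In practice the ranges overlap (a 200 mm object could just as well be scanned handheld), so treat the result as a starting point rather than a rule.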

Plus, thanks to its visual tracking, the Lizard doesn’t need markers to work. You can scan objects without having to stick a bunch of markers on them first; the software’s tracking algorithm keeps track of the object for you.

LIGHT OR DARK

Besides its scan modes, the Lizard also offers some improved scanning functions that should make it easier for users to achieve good results with minimal effort.

For one, Creality states the Lizard can scan accurately in sunlight. 3D scanners typically struggle with too much direct light, forcing users to scan in a darkened room for best results. However, Creality claims that the Lizard, thanks to its multi-spectral optical technology, maintains excellent performance even in bright sunlight, which would vastly broaden its range of applications. The scanner can also be powered by a portable charger, so, in theory, you could go out there and scan the woods to your heart’s content.

What’s more, the CR-Scan Lizard promises better material adaptability when scanning black and dark objects. Sounds like it’s got it all.

COLOR MAPPING

Creality has stated that it plans to release a fully automated color mapping texture suite in March 2022 that promises true color fidelity for your scanned objects. It is currently still in development. Once it is released, high-definition color pictures of the model taken with a phone or DSLR camera can be mapped automatically onto the 3D model, allowing you to create high-quality, vivid color scans.

CR STUDIO

The Lizard’s accompanying software, CR Studio, promises many features that should help you achieve clean scans. For example, the software features one-click model optimization, multi-positional auto alignment, auto noise removal, topology simplification, texture mapping, and much more.

You can also upload and share models via the Creality Cloud, allowing you to slice your scanned objects and even send them to a 3D printer — all with the click of a button.

Release Date & Availability

Creality has set up a limited pre-order via Kickstarter. The scanner has been available for backing since February 10, 2022, alongside some early bird batch sales. According to the Kickstarter campaign, shipping will take place in April.

Over the past days and weeks, Creality has already released a couple of videos on its YouTube channel showing off the scanner’s features in greater detail. Be sure to check those out if the Lizard tickles your fancy.

Creality has also already sent All3DP a CR-Scan Lizard to try out, so we are looking forward to giving it a spin in the next few days. Stay tuned for a full review of our hands-on experience.

At the time of writing, the CR-Scan Lizard is available via Kickstarter with super early bird pledges, priced from $300 for the most basic Lizard package and reaching $400 for the luxury version that already comes with a color kit.

According to the campaign, the off-the-shelf price for the Lizard will be $599 for its base version. So, there are potentially some bucks to be saved if you get in early. However, it wouldn’t be the first time that announced prices changed later on.
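To put the potential saving in numbers, here is a small Python sketch that compares the base-package pledge with the announced retail price. The helper name `early_bird_saving` is made up for this example, and the figures are the ones quoted above, which may still change.

```python
def early_bird_saving(retail_usd: float, pledge_usd: float) -> tuple:
    """Return the absolute saving and the saving as a percentage of retail."""
    saving = retail_usd - pledge_usd
    percent = round(saving / retail_usd * 100, 1)
    return saving, percent


# Prices quoted in the article for the base Lizard package (assumed final here).
saving, percent = early_bird_saving(retail_usd=599, pledge_usd=300)
print(f"Saving: ${saving:.0f} ({percent}% off)")  # Saving: $299 (49.9% off)
```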

Here are the technical specifications for the Creality CR-Scan Lizard 3D scanner:

GENERAL SPECIFICATIONS

- Precision: 0.05 mm

- Resolution ratio: 0.1 – 0.2 mm

- Single capture range: 200 x 100 mm

- Operating Distance: 150 – 400 mm

- Scanning Speed: 10 fps

- Tracking mode: Visual tracking

- Light: LED+NIR (Near-infrared mode)

- Splicing Mode: Fully automatic geometry and visual tracking (without marker)

OUTPUT

- Output Format: STL, OBJ, PLY

- Compatible System: Windows 10 64-bit (macOS to be released in March 2022)

COMMON SPECIFICATIONS

- Machine Size: 155 x 84 x 46 mm

- Machine Weight: 370 g

https://www.kickstarter.com/projects/3dprintmill/creality-cr-scan-lizard-capturing-fine-details-of-view