AI-driven drone from the University of Klagenfurt uses an IDS uEye camera for real-time, object-relative navigation, enabling safer, more efficient and more precise inspections.

The inspection of critical infrastructure such as energy plants, bridges or industrial complexes is essential to ensure its safety, reliability and long-term functionality. Traditional inspection methods typically require people to work in areas that are difficult to access or hazardous. Autonomous mobile robots offer great potential for making inspections more efficient, safer and more accurate. Uncrewed aerial vehicles (UAVs) such as drones have become established as particularly promising platforms, as they can be deployed flexibly and can reach, from the air, areas that are otherwise difficult to access. One of the biggest challenges is to navigate the drone precisely relative to the objects being inspected in order to reliably capture high-resolution images or other sensor data.

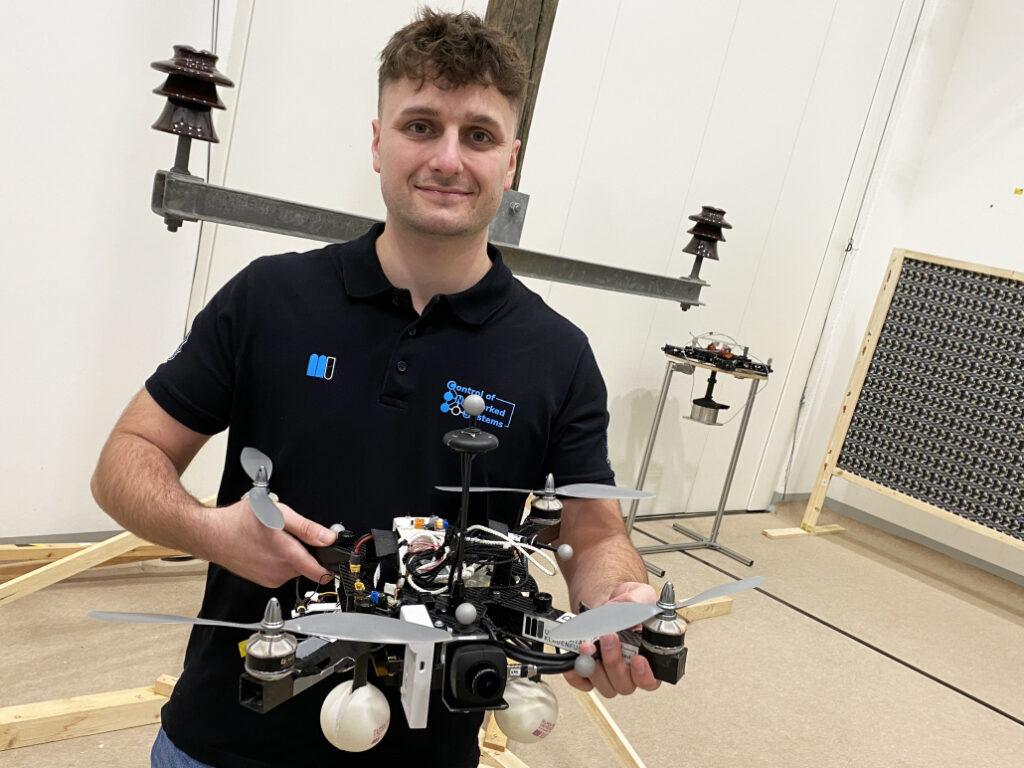

A research group at the University of Klagenfurt has designed a real-time capable drone based on object-relative navigation using artificial intelligence. Also on board: a USB3 Vision industrial camera from the uEye LE family from IDS Imaging Development Systems GmbH.

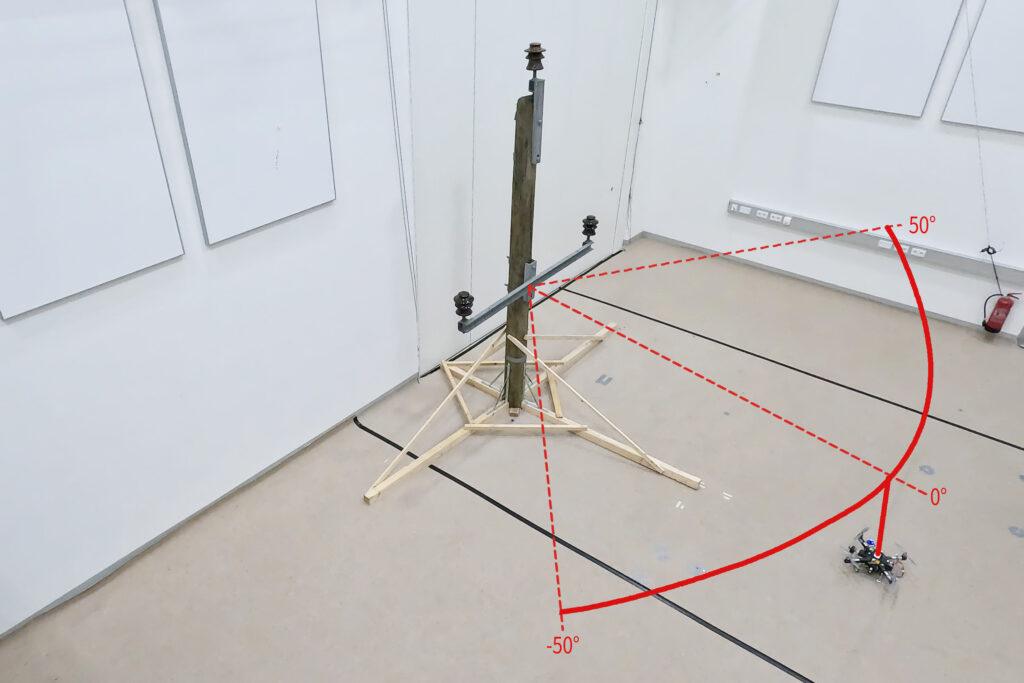

As part of the research project, which was funded by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology (BMK), the drone must autonomously distinguish between a power pole and the insulators mounted on it. It then flies around the insulator at a distance of three meters and takes pictures. „Precise localisation is important so that the camera recordings can also be compared across multiple inspection flights,“ explains Thomas Georg Jantos, PhD student and member of the Control of Networked Systems research group at the University of Klagenfurt. This requires object-relative navigation to extract so-called semantic information about the relevant objects from the raw sensor data captured by the camera. Semantic information makes raw data, in this case the camera images, „understandable“: it makes it possible not only to capture the environment, but also to correctly identify and localise relevant objects.

Concretely, this means that an image pixel is understood not only as an independent colour value (e.g. an RGB value), but as part of an object, e.g. an insulator. In contrast to classic GNSS (Global Navigation Satellite System) positioning, this approach provides not only a position in space, but also a precise position and orientation relative to the object being inspected (e.g. „Drone is located 1.5m to the left of the upper insulator“).
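A relative statement such as „1.5m to the left of the insulator“ follows from a rigid-body transform. If a pose-estimation network reports where the insulator sits in the camera frame, inverting that transform gives where the camera, and hence the drone, sits in the insulator's frame. The NumPy sketch below assumes a 4x4 homogeneous transform `T_cam_obj`, the OpenCV camera convention (x right, y down, z along the optical axis) and, for simplicity, insulator axes parallel to the camera axes; the function name and the numbers are illustrative, not taken from the Klagenfurt system.

```python
import numpy as np

def invert_pose(T: np.ndarray) -> np.ndarray:
    """Invert a 4x4 rigid-body transform [R | t; 0 0 0 1] using R^T instead of a general inverse."""
    R = T[:3, :3]
    t = T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T          # the inverse of a rotation matrix is its transpose
    T_inv[:3, 3] = -R.T @ t      # translation expressed in the other frame
    return T_inv

# Hypothetical network output: pose of the insulator in the camera frame
# (assumed OpenCV convention: x right, y down, z along the optical axis).
T_cam_obj = np.eye(4)
T_cam_obj[:3, 3] = [1.5, 0.0, 3.0]   # insulator 1.5 m to the right of and 3 m in front of the camera

# Pose of the camera expressed in the insulator's frame
T_obj_cam = invert_pose(T_cam_obj)
print("camera position relative to insulator (m):", T_obj_cam[:3, 3])
```

The camera ends up 1.5 m along the insulator's negative x axis and 3 m behind it along z, which is exactly the kind of object-relative information that GNSS coordinates alone cannot provide.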

The key requirement is that image processing and data interpretation run with minimal latency, so that the drone can adapt its navigation and interaction to the specific conditions and requirements of the inspection task in real time.

Semantic information through intelligent image processing

Object recognition, object classification and object pose estimation are performed by AI-based image processing. „In contrast to GNSS-based drone inspection approaches, our AI with its semantic information enables the infrastructure to be inspected from specific, reproducible viewpoints,“ explains Thomas Jantos. „In addition, the chosen approach does not suffer from the usual GNSS problems such as multipath and shadowing caused by large infrastructures or valleys, which can degrade the signal and thus create safety risks.“

How much AI fits into a small quadcopter?

The hardware setup consists of a TWINs Science Copter platform equipped with a Pixhawk PX4 autopilot, an NVIDIA Jetson AGX Orin 64GB Developer Kit as on-board computer and a USB3 Vision industrial camera from IDS. „The challenge is to get the artificial intelligence onto the small helicopters. The computers on the drone are still too slow compared to the computers used to train the AI. Although the first tests have been successful, this is still the subject of current research,“ says Thomas Jantos, describing the problem of further optimising the high-performance AI model for use on the on-board computer.

The camera, on the other hand, delivers high-quality raw data right away, as tests in the university’s own drone hall show. When selecting a suitable camera model, it was not just a question of meeting requirements for speed, size, protection class and, last but not least, price. „The camera’s capabilities are essential for the inspection system’s innovative AI-based navigation algorithm,“ says Thomas Jantos. He opted for the U3-3276LE C-HQ model, a space-saving and cost-effective project camera from the uEye LE family. Its Sony Pregius IMX265 sensor, one of the leading CMOS image sensors in the 3 MP class, provides a resolution of 3.19 megapixels (2064 x 1544 px) at frame rates of up to 58.0 fps. Decisive for the sensor’s performance is its global shutter (1/1.8″ optical format): unlike a rolling shutter, it exposes all pixels simultaneously, so it does not produce ‚distorted‘ images of moving scenes at these short exposure times. „To ensure a safe and robust inspection flight, high image quality and frame rates are essential,“ Thomas Jantos emphasises. As a navigation camera, the uEye LE provides the embedded AI with the comprehensive image data that the on-board computer needs to calculate the relative position and orientation with respect to the object being inspected. Based on this information, the drone can correct its pose in real time.
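A back-of-the-envelope calculation shows why the global shutter matters on a moving platform. A rolling shutter reads rows out one after another, so a scene that moves across the image during readout is sheared. The Python sketch below estimates that shear with a simple linear approximation; the yaw rate, field of view and readout time are illustrative assumptions, not measurements from the Klagenfurt drone or values from the IDS datasheet.

```python
def rolling_shutter_skew_px(yaw_rate_deg_s: float, hfov_deg: float,
                            width_px: int, readout_time_s: float) -> float:
    """Horizontal shift between the first and last image row caused by row-by-row readout.

    Linear approximation: a yaw rotation sweeps the scene across the image at roughly
    (yaw_rate / hfov) * width pixels per second.
    """
    pixel_rate = yaw_rate_deg_s / hfov_deg * width_px   # px/s across the image
    return pixel_rate * readout_time_s

# Illustrative assumptions, not measured values:
yaw_rate = 30.0          # deg/s, a moderate correction manoeuvre
hfov = 60.0              # deg, depends on the lens actually fitted
width = 2064             # px, horizontal resolution of the IMX265
readout = 1.0 / 58.0     # s, assume a hypothetical rolling-shutter readout spanning one frame period

skew = rolling_shutter_skew_px(yaw_rate, hfov, width, readout)
print(f"rolling shutter skew: {skew:.1f} px")
# A global shutter exposes all rows at once, so this geometric shear does not occur
# (motion blur within the exposure time affects both shutter types).
```

Under these assumptions a rolling shutter would shear the image by roughly 18 px from top to bottom, enough to bias a pose estimate that relies on precise object geometry.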

The IDS camera is connected to the on-board computer via USB3. „With the help of the IDS peak SDK, we can integrate the camera and its functionalities very easily into ROS (Robot Operating System) and thus into our drone,“ explains Thomas Jantos. IDS peak also enables efficient raw image processing and simple adjustment of acquisition parameters such as auto exposure, auto white balance, auto gain and image downsampling.
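Simple arithmetic shows how much of the USB3 link the camera occupies. The sketch multiplies resolution, frame rate and bit depth for the full sensor mode and for the 1280 x 960 px at 50 fps mode that the team uses in flight. The 8 bits per pixel and the roughly 400 MB/s of usable USB 3.0 throughput are assumptions for illustration; the SuperSpeed line rate is 5 Gbit/s, and practical throughput is lower and depends on the host controller.

```python
def data_rate_mb_s(width_px: int, height_px: int, fps: float, bits_per_pixel: int = 8) -> float:
    """Uncompressed image data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width_px * height_px * fps * bits_per_pixel / 8 / 1e6

USB3_USABLE_MB_S = 400.0  # assumption: practical USB 3.0 throughput, below the 5 Gbit/s line rate

full_sensor = data_rate_mb_s(2064, 1544, 58.0)   # maximum sensor mode
flight_mode = data_rate_mb_s(1280, 960, 50.0)    # mode used during inspection flights

for name, rate in [("full sensor", full_sensor), ("flight mode", flight_mode)]:
    print(f"{name}: {rate:.1f} MB/s ({rate / USB3_USABLE_MB_S:.0%} of the assumed USB3 budget)")
```

Even the full 3.19 MP stream at 58 fps stays well within the assumed link capacity, so in this setup the on-board AI, not the interface, limits the usable frame rate.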

To achieve a high level of autonomy and to handle control, mission management, safety monitoring and data recording, the researchers run the source-available CNS Flight Stack on the on-board computer. The CNS Flight Stack includes software modules for navigation, sensor fusion and control and enables the autonomous execution of reproducible, customisable missions. „The modularity of the CNS Flight Stack and its ROS interfaces enable us to seamlessly integrate our sensors and the AI-based ‚state estimator‘ for position estimation into the entire stack and thus realise autonomous UAV flights. We are analysing and developing our approach using the example of an inspection flight around a power pole in the drone hall at the University of Klagenfurt,“ explains Thomas Jantos.

Precise, autonomous alignment through sensor fusion

The IMU (Inertial Measurement Unit) supplies the high-frequency measurements on which the drone’s control relies. Fusing them with camera data, LiDAR or GNSS enables real-time navigation and stabilisation of the drone, for example for position corrections or precise alignment with inspection objects. For the Klagenfurt drone, the IMU of the PX4 autopilot drives the dynamic model of an EKF (Extended Kalman Filter). In the prediction step, the EKF estimates where the drone should be now based on the last known position, velocity and attitude. New measurements (e.g. from the IMU, GNSS or camera) arrive at up to 200 Hz and are incorporated into the state estimate.
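The prediction and correction cycle can be illustrated with a deliberately simplified filter. The Python sketch below is a linear Kalman filter along a single axis, not the CNS Flight Stack’s EKF, which estimates full 3D position, velocity and attitude with nonlinear models. IMU acceleration drives the prediction at an assumed 200 Hz, and a camera-based position measurement corrects it at an assumed 50 Hz, matching the rates mentioned in the article; the class name, noise values and the hovering scenario are hypothetical.

```python
import numpy as np

class KalmanFilter1D:
    """Hypothetical constant-velocity filter along one axis: state x = [position, velocity]."""

    def __init__(self, accel_noise: float, meas_noise: float):
        self.x = np.zeros(2)                     # initial position (m) and velocity (m/s)
        self.P = np.eye(2)                       # initial state covariance
        self.q = accel_noise ** 2                # variance of the IMU acceleration noise
        self.R = np.array([[meas_noise ** 2]])   # variance of the camera position measurement
        self.H = np.array([[1.0, 0.0]])          # the camera measures position only

    def predict(self, accel: float, dt: float) -> None:
        """Prediction step: propagate the state with one IMU acceleration sample."""
        F = np.array([[1.0, dt], [0.0, 1.0]])
        B = np.array([0.5 * dt ** 2, dt])
        self.x = F @ self.x + B * accel
        Q = np.outer(B, B) * self.q              # process noise caused by acceleration uncertainty
        self.P = F @ self.P @ F.T + Q

    def update(self, z: float) -> None:
        """Update step: correct the prediction with a camera-based position measurement."""
        y = z - self.H @ self.x                  # innovation: measurement minus prediction
        S = self.H @ self.P @ self.H.T + self.R  # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S) # Kalman gain, shape (2, 1)
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P

# Hypothetical scenario: the drone holds 1.0 m from a reference point; the filter starts at 0.
rng = np.random.default_rng(0)
kf = KalmanFilter1D(accel_noise=0.2, meas_noise=0.05)
true_pos = 1.0
for step in range(200):                          # 1 s of IMU samples at an assumed 200 Hz
    kf.predict(accel=rng.normal(0.0, 0.2), dt=1 / 200)
    if step % 4 == 0:                            # camera measurement at an assumed 50 Hz
        kf.update(z=true_pos + rng.normal(0.0, 0.05))
print(f"estimated position: {kf.x[0]:.2f} m")
```

Between camera frames the estimate relies on IMU integration alone; each camera measurement then pulls it back towards the observed position in proportion to the Kalman gain.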

The camera captures raw images at 50 fps with an image size of 1280 x 960 px. „This is the maximum frame rate that we can achieve with our AI model on the drone’s on-board computer,“ explains Thomas Jantos. When the camera is started, automatic white balance and gain adjustment are carried out once, while automatic exposure control remains switched off. In the update step, the EKF compares the prediction with the incoming measurements and corrects the estimate accordingly. This keeps the drone stable and allows it to hold its position autonomously with high precision.
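The start-up routine described here can be written down as a list of camera settings using feature names from the GenICam Standard Features Naming Convention (`Width`, `Height`, `AcquisitionFrameRate`, `ExposureAuto`, `GainAuto`, `BalanceWhiteAuto`). The Python sketch below only builds that list and derives the per-frame time budget at 50 fps; it does not talk to a camera. Writing the values to the device, for example through the IDS peak SDK, is deliberately left out, and whether the U3-3276LE implements each auto feature on the camera or in host software is an assumption to verify. The helper names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FeatureSetting:
    name: str      # GenICam SFNC feature name
    value: object  # enumeration entry or numeric value

def startup_settings(width: int = 1280, height: int = 960, fps: float = 50.0) -> list[FeatureSetting]:
    """Hypothetical start-up sequence mirroring the procedure described in the text."""
    return [
        FeatureSetting("Width", width),
        FeatureSetting("Height", height),
        FeatureSetting("AcquisitionFrameRate", fps),
        FeatureSetting("ExposureAuto", "Off"),       # fixed exposure during flight
        FeatureSetting("BalanceWhiteAuto", "Once"),  # white balance adjusted once at start-up
        FeatureSetting("GainAuto", "Once"),          # gain adjusted once at start-up
    ]

def frame_budget_ms(fps: float) -> float:
    """Maximum processing time per frame needed to keep up with the frame rate."""
    return 1000.0 / fps

settings = startup_settings()
for s in settings:
    print(f"{s.name} = {s.value}")
print(f"per-frame budget at 50 fps: {frame_budget_ms(50.0):.1f} ms")
```

At 50 fps, each frame must be processed within 20 ms on average, which is consistent with the statement that this is the highest rate the AI model sustains on the on-board computer.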

Outlook

„With regard to research in the field of mobile robots, industrial cameras are necessary for a variety of applications and algorithms. It is important that these cameras are robust, compact, lightweight, fast and have a high resolution. On-device pre-processing (e.g. binning) is also very important, as it saves valuable computing time and resources on the mobile robot,“ emphasises Thomas Jantos.
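Binning combines neighbouring pixels into one, so 2x2 binning cuts the pixel count, and with it the downstream processing load, by a factor of four. In the camera this happens on the device; the NumPy sketch below only emulates 2x2 averaging binning on the host to illustrate the effect. It treats the image as single-channel: on a colour sensor’s raw Bayer data, binning must respect the colour filter pattern, which this sketch ignores. Whether a given camera sums or averages binned pixels is not modelled here.

```python
import numpy as np

def bin2x2(image: np.ndarray) -> np.ndarray:
    """Average each 2x2 block of a single-channel image (height and width must be even)."""
    h, w = image.shape
    if h % 2 or w % 2:
        raise ValueError("image dimensions must be even for 2x2 binning")
    # Split into (h/2, 2, w/2, 2) blocks and average over the two in-block axes.
    return image.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

# Synthetic stand-in for a full-resolution IMX265 frame (random values, not real image data)
full = np.random.default_rng(0).integers(0, 256, size=(1544, 2064), dtype=np.uint16)
binned = bin2x2(full.astype(np.float32))
print(full.shape, "->", binned.shape, f"({full.size / binned.size:.0f}x fewer pixels)")
```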

With these features, IDS cameras contribute to a promising research approach that aims to set a new standard in the autonomous inspection of critical infrastructure and can significantly increase safety, efficiency and data quality.

The Control of Networked Systems (CNS) research group is part of the Institute for Intelligent System Technologies. It is involved in teaching in the English-language Bachelor’s and Master’s programs „Robotics and AI“ and „Information and Communications Engineering (ICE)“ at the University of Klagenfurt. The group’s research focuses on control engineering, state estimation, path and motion planning, modeling of dynamic systems, numerical simulations and the automation of mobile robot swarms.

Model used: USB3 Vision industrial camera U3-3276LE Rev.1.2

Camera family: uEye LE

Image rights: Alpen-Adria-Universität (aau) Klagenfurt

© 2025 IDS Imaging Development Systems GmbH