Tag archive: IDS

Enhancing Drone Navigation with AI and IDS uEye Camera Technology

AI-driven drone from University of Klagenfurt uses IDS uEye camera for real-time, object-relative navigation—enabling safer, more efficient, and precise inspections.

The inspection of critical infrastructures such as energy plants, bridges or industrial complexes is essential to ensure their safety, reliability and long-term functionality. Traditional inspection methods typically require people to work in areas that are difficult to access or hazardous. Autonomous mobile robots offer great potential for making inspections more efficient, safer and more accurate. Uncrewed aerial vehicles (UAVs) such as drones in particular have become established as promising platforms, as they can be used flexibly and can reach hard-to-access areas from the air. One of the biggest challenges here is to navigate the drone precisely relative to the objects to be inspected in order to reliably capture high-resolution image data or other sensor data.

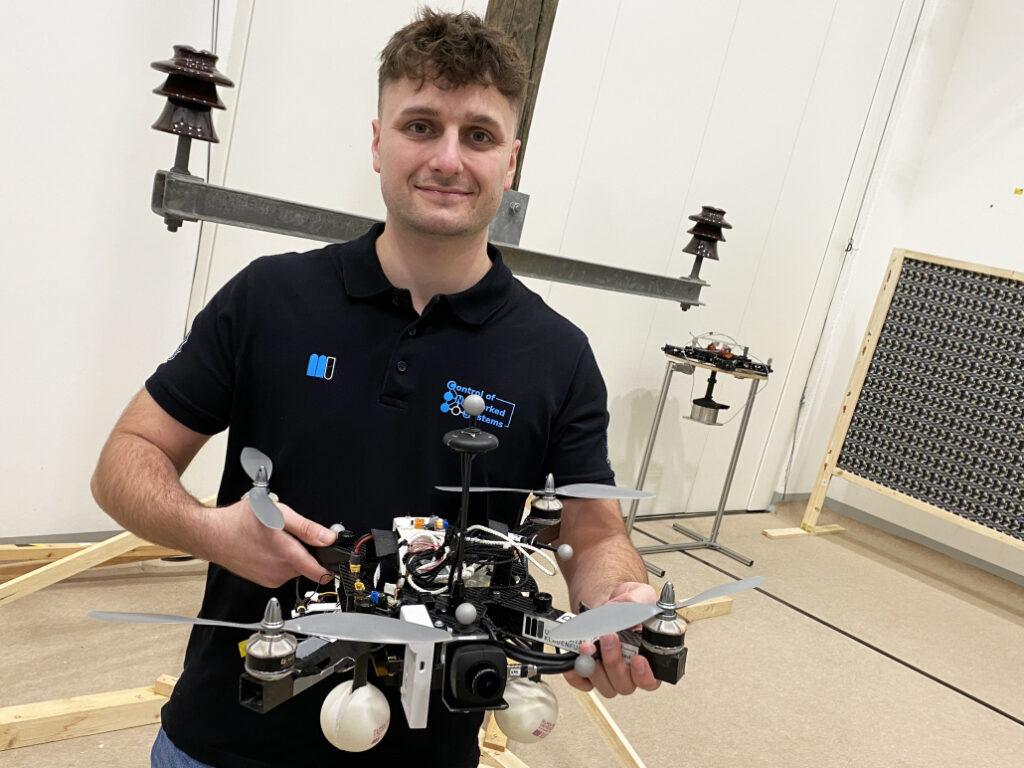

A research group at the University of Klagenfurt has designed a real-time capable drone based on object-relative navigation using artificial intelligence. Also on board: a USB3 Vision industrial camera from the uEye LE family from IDS Imaging Development Systems GmbH.

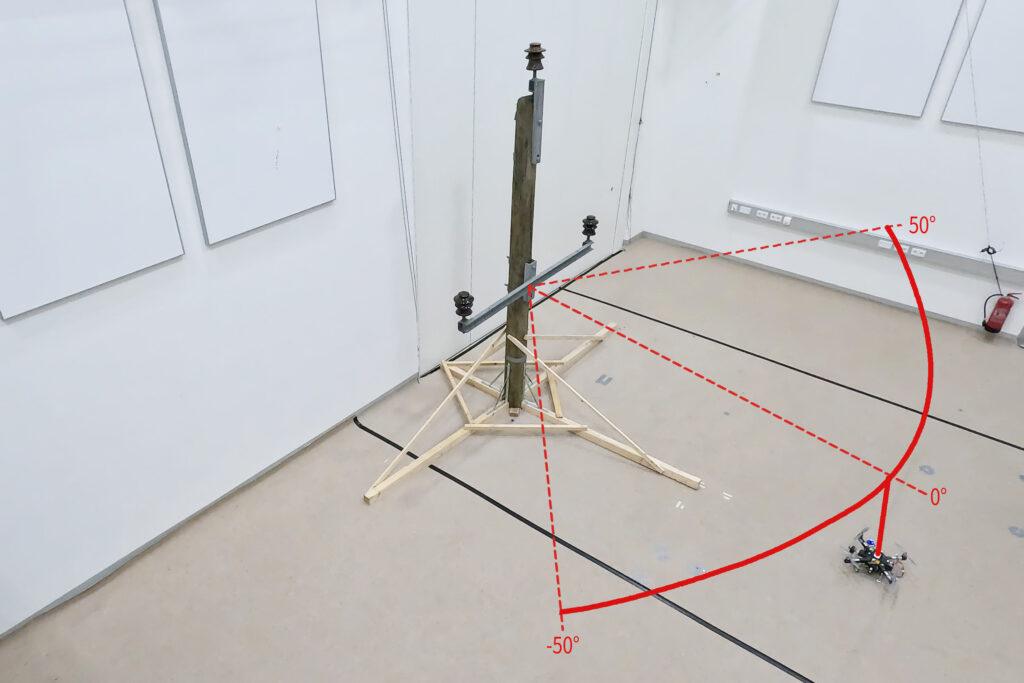

As part of the research project, which was funded by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology (BMK), the drone must autonomously recognise what is a power pole and what is an insulator on the power pole. It will fly around the insulator at a distance of three meters and take pictures. „Precise localisation is important such that the camera recordings can also be compared across multiple inspection flights,“ explains Thomas Georg Jantos, PhD student and member of the Control of Networked Systems research group at the University of Klagenfurt. The prerequisite for this is that object-relative navigation must be able to extract so-called semantic information about the objects in question from the raw sensory data captured by the camera. Semantic information makes raw data, in this case the camera images, „understandable“ and makes it possible not only to capture the environment, but also to correctly identify and localise relevant objects.

In this case, this means that an image pixel is not only understood as an independent colour value (e.g. RGB value), but as part of an object, e.g. an insulator. In contrast to classic GNSS (Global Navigation Satellite System), this approach not only provides a position in space, but also a precise relative position and orientation with respect to the object to be inspected (e.g. „Drone is located 1.5m to the left of the upper insulator“).
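To make the idea of an object-relative position concrete, the following minimal sketch expresses a drone position in the coordinate frame of the inspected object. It is a 2D illustration under stated assumptions, not the research group's implementation: the function name `relative_position`, the yaw-only object orientation and the axis convention (x forward, y left) are all chosen here for illustration.

```python
import math


def relative_position(drone_xy, object_xy, object_yaw_rad):
    """Return (forward, left) offsets of the drone in the object's frame.

    Assumed convention: the object frame has x pointing forward and y
    pointing left; object_yaw_rad is the object's heading in the world frame.
    """
    dx = drone_xy[0] - object_xy[0]
    dy = drone_xy[1] - object_xy[1]
    c, s = math.cos(object_yaw_rad), math.sin(object_yaw_rad)
    # Project the world-frame offset onto the object's forward and left axes.
    forward = c * dx + s * dy
    left = -s * dx + c * dy
    return forward, left


# Hypothetical numbers: insulator at (10, 5) facing along world +y,
# drone hovering at (8.5, 5).
forward, left = relative_position((8.5, 5.0), (10.0, 5.0), math.pi / 2)
print(f"forward: {forward:.2f} m, left: {left:.2f} m")
```

With these example numbers the drone sits 1.5 m to the left of the object, which is the kind of statement a GNSS fix alone cannot provide because it carries no information about where the object is or how it is oriented.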

The key requirement is that image processing and data interpretation must run with as little latency as possible so that the drone can adapt its navigation and interaction to the specific conditions and requirements of the inspection task in real time.

Semantic information through intelligent image processing

Object recognition, object classification and object pose estimation are performed using artificial intelligence in image processing. „In contrast to GNSS-based inspection approaches using drones, our AI with its semantic information enables the inspection of the infrastructure to be inspected from certain reproducible viewpoints,“ explains Thomas Jantos. „In addition, the chosen approach does not suffer from the usual GNSS problems such as multi-pathing and shadowing caused by large infrastructures or valleys, which can lead to signal degradation and thus to safety risks.“

How much AI fits into a small quadcopter?

The hardware setup consists of a TWINs Science Copter platform equipped with a Pixhawk PX4 autopilot, an NVIDIA Jetson Orin AGX 64GB DevKit as on-board computer and a USB3 Vision industrial camera from IDS. „The challenge is to get the artificial intelligence onto the small helicopters. The computers on the drone are still too slow compared to the computers used to train the AI. With the first successful tests, this is still the subject of current research,“ says Thomas Jantos, describing the problem of further optimising the high-performance AI model for use on the on-board computer.

The camera, on the other hand, delivers perfect basic data straight away, as the tests in the university’s own drone hall show. When selecting a suitable camera model, it was not just a question of meeting the requirements in terms of speed, size, protection class and, last but not least, price. „The camera’s capabilities are essential for the inspection system’s innovative AI-based navigation algorithm,“ says Thomas Jantos. He opted for the U3-3276LE C-HQ model, a space-saving and cost-effective project camera from the uEye LE family. The integrated Sony Pregius IMX265 sensor is probably the best CMOS image sensor in the 3 MP class and enables a resolution of 3.19 megapixels (2064 x 1544 px) with a frame rate of up to 58.0 fps. The integrated 1/1.8″ global shutter, which does not produce any ‚distorted‘ images at these short exposure times compared to a rolling shutter, is decisive for the performance of the sensor. „To ensure a safe and robust inspection flight, high image quality and frame rates are essential,“ Thomas Jantos emphasises. As a navigation camera, the uEye LE provides the embedded AI with the comprehensive image data that the on-board computer needs to calculate the relative position and orientation with respect to the object to be inspected. Based on this information, the drone is able to correct its pose in real time.
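The resolution and frame-rate figures can be checked with a few lines of arithmetic. The sketch below computes the pixel count and an uncompressed data rate; the 8 bits per pixel is an assumption (the article does not state the pixel format), and the helper names are hypothetical.

```python
def megapixels(width, height):
    """Pixel count of one frame in megapixels."""
    return width * height / 1e6


def raw_data_rate_mb_per_s(width, height, fps, bits_per_pixel=8):
    """Uncompressed image data rate in MB/s (assumes 8-bit pixels by default)."""
    return width * height * fps * bits_per_pixel / 8 / 1e6


# Full sensor resolution at the maximum frame rate quoted above.
print(round(megapixels(2064, 1544), 2))                    # 3.19
print(round(raw_data_rate_mb_per_s(2064, 1544, 58.0), 1))  # 184.8

# Flight configuration mentioned later in the article: 1280 x 960 px at 50 fps.
print(round(raw_data_rate_mb_per_s(1280, 960, 50), 2))     # 61.44
```

Under the 8-bit assumption, the reduced flight configuration needs roughly a third of the data rate of the full sensor readout, which eases the load on the on-board computer.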

The IDS camera is connected to the on-board computer via a USB3 interface. „With the help of the IDS peak SDK, we can integrate the camera and its functionalities very easily into the ROS (Robot Operating System) and thus into our drone,“ explains Thomas Jantos. IDS peak also enables efficient raw image processing and simple adjustment of recording parameters such as auto exposure, auto white balance, auto gain and image downsampling.
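As a rough illustration of what such parameter adjustment could look like, the sketch below collects startup settings under GenICam SFNC-style feature names and applies them through a stand-in object. The values mirror the startup behaviour described later in the article (white balance and gain adjusted once, auto exposure off). `DictNodeMap` and `apply_settings` are hypothetical helpers written for this example and are not part of the IDS peak API; whether a given camera exposes exactly these feature names and entries should be checked against its documentation.

```python
# SFNC-style feature names and entries; treat them as assumptions to verify.
STARTUP_SETTINGS = {
    "BalanceWhiteAuto": "Once",  # adjust white balance once at startup
    "GainAuto": "Once",          # adjust gain once at startup
    "ExposureAuto": "Off",       # keep automatic exposure control disabled
}


class DictNodeMap:
    """Hypothetical stand-in for a camera node map (NOT the IDS peak API)."""

    def __init__(self):
        self.values = {}

    def set_enum(self, name, entry):
        # A real SDK would write the value to the device here.
        self.values[name] = entry


def apply_settings(node_map, settings):
    """Write every setting to the node map and return it."""
    for name, entry in settings.items():
        node_map.set_enum(name, entry)
    return node_map


node_map = apply_settings(DictNodeMap(), STARTUP_SETTINGS)
print(node_map.values)
```

Keeping the settings in one dictionary makes the camera configuration easy to log alongside each inspection flight, which helps when recordings from different flights are compared.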

To ensure a high level of autonomy, control, mission management, safety monitoring and data recording, the researchers use the source-available CNS Flight Stack on the on-board computer. The CNS Flight Stack includes software modules for navigation, sensor fusion and control algorithms and enables the autonomous execution of reproducible and customisable missions. „The modularity of the CNS Flight Stack and the ROS interfaces enable us to seamlessly integrate our sensors and the AI-based ’state estimator‘ for position detection into the entire stack and thus realise autonomous UAV flights. The functionality of our approach is being analysed and developed using the example of an inspection flight around a power pole in the drone hall at the University of Klagenfurt,“ explains Thomas Jantos.

Precise, autonomous alignment through sensor fusion

The high-frequency control signals for the drone are generated by the IMU (Inertial Measurement Unit). Sensor fusion with camera data, LIDAR or GNSS (Global Navigation Satellite System) enables real-time navigation and stabilisation of the drone – for example for position corrections or precise alignment with inspection objects. For the Klagenfurt drone, the IMU of the PX4 is used as a dynamic model in an EKF (Extended Kalman Filter). The EKF estimates where the drone should be now based on the last known position, speed and attitude. New data (e.g. from IMU, GNSS or camera) is then recorded at up to 200 Hz and incorporated into the state estimation process.

The camera captures raw images at 50 fps and an image size of 1280 x 960px. „This is the maximum frame rate that we can achieve with our AI model on the drone’s onboard computer,“ explains Thomas Jantos. When the camera is started, an automatic white balance and gain adjustment are carried out once, while the automatic exposure control remains switched off. The EKF compares the prediction and measurement and corrects the estimate accordingly. This ensures that the drone remains stable and can maintain its position autonomously with high precision.
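The predict-and-correct cycle described above can be sketched with a one-dimensional linear Kalman filter. This is a deliberate simplification of an EKF, not the filter used on the drone: the state is a single position, the motion input is a velocity assumed to come from IMU integration, and the noise values `q` and `r` are made-up example numbers.

```python
def predict(x, p, velocity, dt, q):
    """Propagate the position estimate x and its variance p over dt seconds."""
    return x + velocity * dt, p + q


def correct(x, p, z, r):
    """Blend the prediction with a measurement z that has variance r."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


# One cycle at 200 Hz with hypothetical numbers.
x, p = 0.0, 1.0
x, p = predict(x, p, velocity=1.0, dt=1.0 / 200.0, q=0.01)
x, p = correct(x, p, z=0.1, r=0.04)  # e.g. a camera-based position measurement
print(x, p)
```

The corrected estimate lies between the prediction and the measurement, weighted by their uncertainties, and the variance shrinks after each correction. A full EKF applies the same two steps to a multi-dimensional state with a linearised nonlinear model.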

Outlook

„With regard to research in the field of mobile robots, industrial cameras are necessary for a variety of applications and algorithms. It is important that these cameras are robust, compact, lightweight, fast and have a high resolution. On-device pre-processing (e.g. binning) is also very important, as it saves valuable computing time and resources on the mobile robot,“ emphasises Thomas Jantos.

With corresponding features, IDS cameras are helping to set a new standard in the autonomous inspection of critical infrastructures in this promising research approach, which significantly increases safety, efficiency and data quality.

The Control of Networked Systems (CNS) research group is part of the Institute for Intelligent System Technologies. It is involved in teaching in the English-language Bachelor’s and Master’s programs „Robotics and AI“ and „Information and Communications Engineering (ICE)“ at the University of Klagenfurt. The group’s research focuses on control engineering, state estimation, path and motion planning, modeling of dynamic systems, numerical simulations and the automation of mobile robots in a swarm.

Model used: USB3 Vision industrial camera U3-3276LE Rev.1.2

Camera family: uEye LE

Image rights: Alpen-Adria-Universität (aau) Klagenfurt

© 2025 IDS Imaging Development Systems GmbH

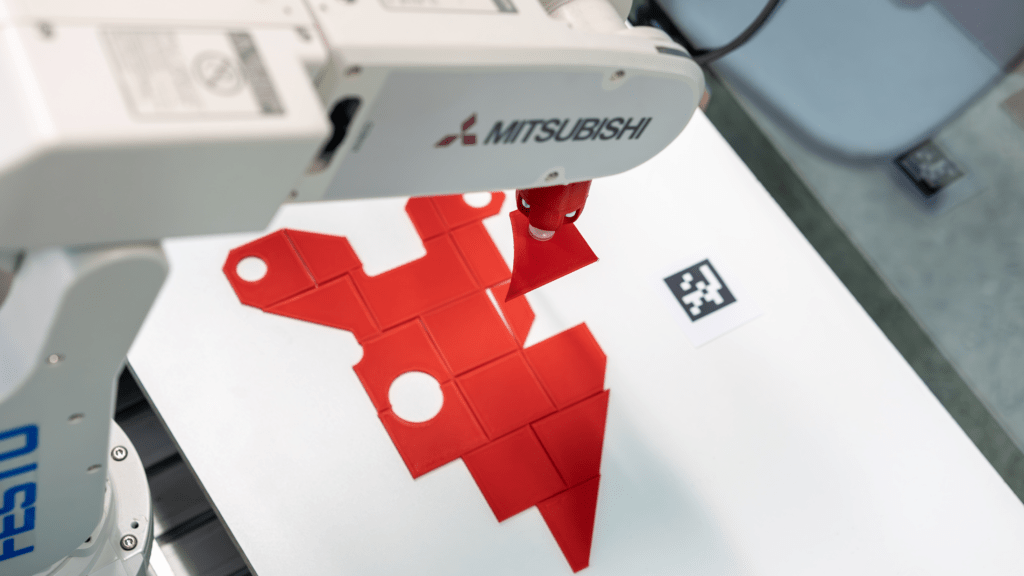

Robot automates pick-and-place processes with AI-supported image processing

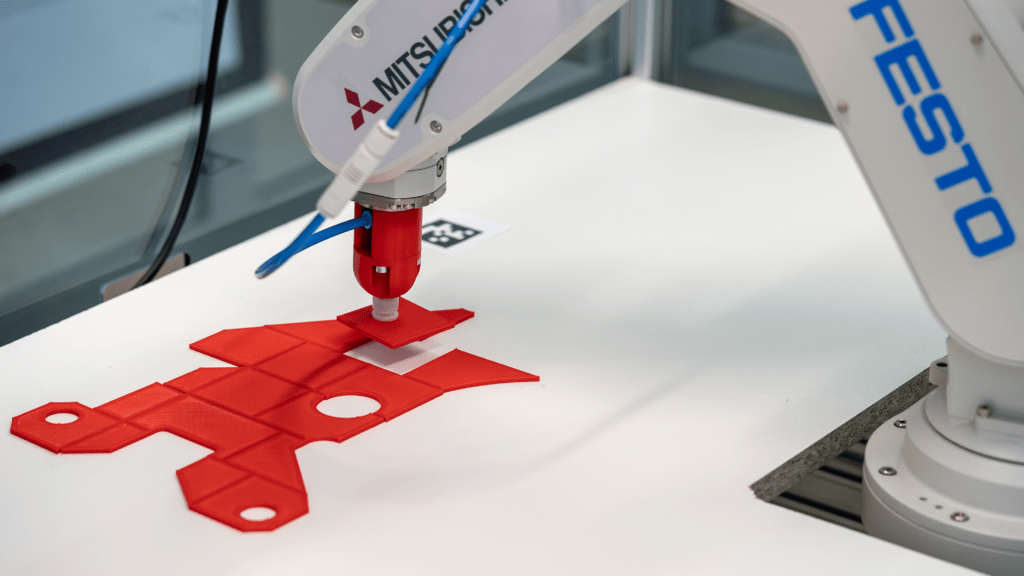

Pick-and-place applications are a central field of use for robotics. They are frequently used in industry to speed up assembly processes and reduce manual work, an exciting topic for computer science master's students at the Institute for Data-Optimised Manufacturing (Institut für datenoptimierte Fertigung) at Hochschule Kempten. They developed a robot that optimises processes through the use of artificial intelligence and computer vision. Based on an assembly drawing, the system is able to pick up individual components and place them at a specified location, comparable to a puzzle. The parts can then be glued together manually by an employee.

Two IDS industrial cameras provide the necessary image information

With the help of two uEye XC cameras and AI-supported image processing, the system analyses the environment and calculates precise pick-up and placement coordinates. One of the cameras was placed above the work surface, the other above the pick-up point. Specifically, an AI pipeline processes the images from the two cameras in several steps to determine the exact position and orientation of the objects. Using computer vision algorithms and neural networks, the system recognises relevant features, calculates the optimal gripping points and generates precise coordinates for picking up and placing the objects. In addition, the system uniquely identifies the parts by segmenting their surface and matching the contours against a database. It also uses the results to enable an approach to parts that have already been placed. The automation solution thus reduces dependence on expert knowledge, shortens process times and counteracts the shortage of skilled workers.

Camera requirements

Interface, sensor, size and price were the decisive criteria for the choice of camera model. The uEye XC combines the ease of use of a webcam with the performance of an industrial camera. It requires only a single cable connection for operation. Equipped with a 13 MP onsemi sensor (AR1335), the autofocus camera delivers high-resolution images and videos. An interchangeable macro attachment lens allows a shorter object distance, which makes the camera suitable for close-range applications as well. Integration was also very simple, as Raphael Seliger, research associate at Hochschule Kempten, explains: „We connect the cameras to our Python backend via the IDS peak interface.“

Outlook

In future, the system is to be further developed using reinforcement learning, a machine learning method based on learning through trial and error. „We would like to expand the AI functions to make the pick-and-place processes more intelligent. We may need an additional camera directly on the robot arm for this,“ explains Seliger. An automatic accuracy check of the placed parts is also planned. In the long term, the robot should be able to carry out all the necessary steps independently based solely on the assembly drawing.

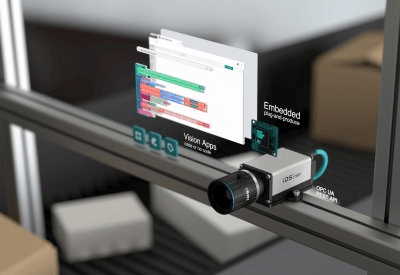

IDS NXT malibu now available with the 8 MP Sony Starvis 2 sensor IMX678

Intelligent industrial camera with 4K streaming and excellent low-light performance

IDS expands its product line for intelligent image processing and launches a new IDS NXT malibu camera. It enables AI-based image processing, video compression and streaming in full 4K sensor resolution at 30 fps – directly in and out of the camera. The 8 MP sensor IMX678 is part of the Starvis 2 series from Sony. It ensures impressive image quality even in low light conditions and twilight.

Industrial camera with live AI: IDS NXT malibu is able to independently perform AI-based image analyses and provide the results as live overlays in compressed video streams via RTSP (Real Time Streaming Protocol). Hidden inside is a special SoC (system-on-a-chip) from Ambarella, which is known from action cameras. An ISP with helpful automatic features such as brightness, noise and colour correction ensures that optimum image quality is attained at all times. The new 8 MP camera complements the recently introduced camera variant with the 5 MP onsemi sensor AR0521.

To coincide with the market launch of the new model, IDS Imaging Development Systems has also published a new software release. Users now also have the option of displaying live images from the IDS NXT malibu camera models via MJPEG-compressed HTTP stream. This enables visualisation in any web browser without additional software or plug-ins. In addition, the AI vision studio IDS lighthouse can be used to train individual neural networks for the Ambarella SoC of the camera family. This simplifies the use of the camera for AI-based image analyses with classification, object recognition and anomaly detection methods.

IDS NXT malibu: Camera combines advanced consumer image processing and AI technology from Ambarella and industrial quality from IDS

New class of edge AI industrial cameras allows AI overlays in live video streams

IDS NXT malibu marks a new class of intelligent industrial cameras that act as edge devices and generate AI overlays in live video streams. For the new camera series, IDS Imaging Development Systems has collaborated with Ambarella, a leading developer of visual AI products, making consumer technology available for demanding applications in industrial quality. It features Ambarella’s CVflow® AI vision system on chip and takes full advantage of the SoC’s advanced image processing and on-camera AI capabilities. Consequently, image analysis can be performed at high speed (>25fps) and displayed as live overlays in compressed video streams via RTSP for end devices.

Thanks to the SoC’s integrated image signal processor (ISP), the information captured by the light-sensitive onsemi AR0521 image sensor is processed directly on the camera and accelerated by its integrated hardware. The camera also offers helpful automatic features, such as brightness, noise and colour correction, which significantly improve image quality.

„With IDS NXT malibu, we have developed an industrial camera that can analyse images in real time and incorporate results directly into video streams,“ explained Kai Hartmann, Product Innovation Manager at IDS. „The combination of on-camera AI with compression and streaming is a novelty in the industrial setting, opening up new application scenarios for intelligent image processing.“

These on-camera capabilities were made possible through close collaboration between IDS and Ambarella, leveraging the companies’ strengths in industrial camera and consumer technology. „We are proud to work with IDS, a leading company in industrial image processing,“ said Jerome Gigot, senior director of marketing at Ambarella. „The IDS NXT malibu represents a new class of industrial-grade edge AI cameras, achieving fast inference times and high image quality via our CVflow AI vision SoC.“

IDS NXT malibu has entered series production. The camera is part of the IDS NXT all-in-one AI system. Optimally coordinated components – from the camera to the AI vision studio – accompany the entire workflow. This includes the acquisition of images and their labelling, through to the training of a neural network and its execution on the IDS NXT series of cameras.

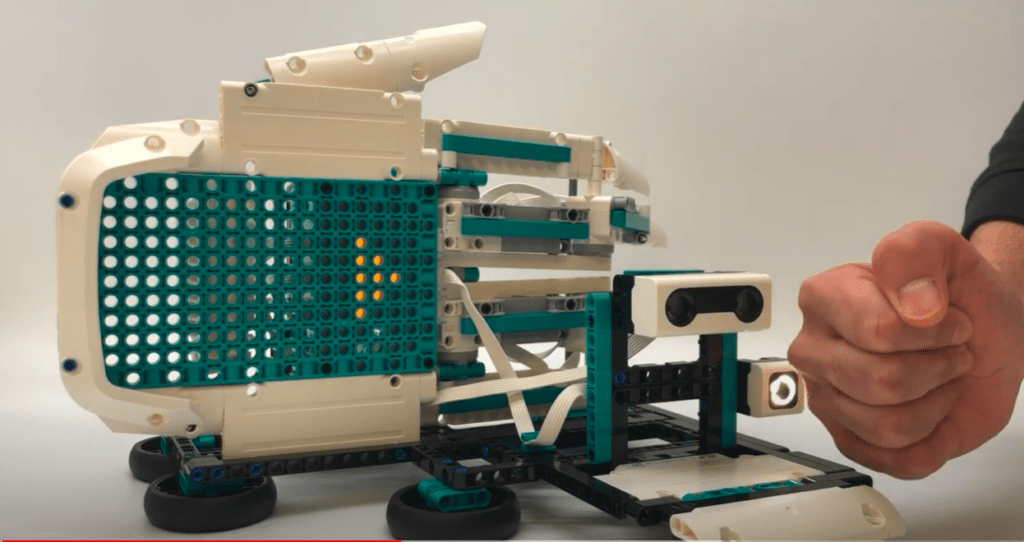

Robot plays „Rock, Paper, Scissors“ – Part 1/3

Gesture recognition with intelligent camera

I am passionate about technology and robotics. Here in my own blog, I am always taking on new tasks. But I have hardly ever worked with image processing. However, a colleague’s LEGO® MINDSTORMS® robot, which can recognize the rock, paper or scissors gestures of a hand with several different sensors, gave me an idea: „The robot should be able to ’see‘.“ Until now, the respective gesture had to be made at a very specific point in front of the robot in order to be reliably recognized. Several sensors were needed for this, which made the system inflexible and dampened the joy of playing. Can image processing solve this task more „elegantly“?

From the idea to implementation

In my search for a suitable camera, I came across IDS NXT – a complete system for the use of intelligent image processing. It fulfilled all my requirements and, thanks to artificial intelligence, offered much more than pure gesture recognition. My interest was piqued, especially because the evaluation of the images and the communication of the results took place directly on or through the camera – without an additional PC! In addition, the IDS NXT Experience Kit came with all the components needed to start using the application immediately – without any prior knowledge of AI.

I took the idea further and began to develop a robot that would play the game „Rock, Paper, Scissors“ in the future, following the same process as the classic game: The (human) player is asked to perform one of the familiar gestures (rock, paper, scissors) in front of the camera. The virtual opponent has already randomly determined its gesture at this point. The move is evaluated in real time and the winner is displayed.

The first step: Gesture recognition by means of image processing

But until then, some intermediate steps were necessary. I began by implementing gesture recognition using image processing – new territory for me as a robotics fan. However, with the help of IDS lighthouse – a cloud-based AI vision studio – this was easier to realize than expected. Here, ideas evolve into complete applications. For this purpose, neural networks are trained with application images that carry the necessary product knowledge – in this case the individual gestures from different perspectives – and packaged into a suitable application workflow.

The training process was super easy: I just used IDS lighthouse’s step-by-step wizard after taking several hundred pictures of my hands making rock, paper or scissors gestures from different angles against different backgrounds. The first trained AI was able to reliably recognize the gestures directly. This works for both left- and right-handers with a recognition rate of approx. 95%. Probabilities are returned for the labels „Rock“, „Paper“, „Scissor“, or „Nothing“. A satisfactory result. But what happens now with the data obtained?
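One simple way to turn the returned probabilities into a single decision is to take the most likely label and fall back to „Nothing“ when the network is not confident enough. The sketch below assumes the probabilities arrive as a dictionary keyed by label; that format, the function name `pick_gesture` and the 0.6 threshold are assumptions made for illustration, not the camera's actual output format.

```python
def pick_gesture(probabilities, min_confidence=0.6):
    """Return the most probable label, or "Nothing" if confidence is too low."""
    label = max(probabilities, key=probabilities.get)
    if probabilities[label] < min_confidence:
        return "Nothing"
    return label


# Hypothetical classifier outputs.
clear = {"Rock": 0.90, "Paper": 0.05, "Scissor": 0.03, "Nothing": 0.02}
unsure = {"Rock": 0.40, "Paper": 0.35, "Scissor": 0.20, "Nothing": 0.05}
print(pick_gesture(clear))   # Rock
print(pick_gesture(unsure))  # Nothing
```

The threshold trades missed gestures against wrong calls: a higher value makes the game ask the player to repeat a gesture more often, but avoids scoring a round on a doubtful classification.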

Further processing

The further processing of the recognized gestures could be done by means of a specially created vision app. For this, the captured image of the respective gesture – after evaluation by the AI – must be passed on to the app. The latter „knows“ the rules of the game and can thus decide which gesture beats another. It then determines the winner. In the first stage of development, the app will also simulate the opponent. All this is currently in the making and will be implemented in the next step to become a „Rock, Paper, Scissors“-playing robot.
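The game logic such an app needs is small. The following sketch shows one possible way to encode the rules and a simulated opponent; it is plain Python for illustration and not code for the IDS NXT vision app framework. The label spelling follows the classifier labels mentioned above, and the names `BEATS`, `decide` and `simulated_opponent` are chosen for this example.

```python
import random

# Each gesture maps to the gesture it defeats.
BEATS = {"Rock": "Scissor", "Scissor": "Paper", "Paper": "Rock"}


def decide(player, opponent):
    """Return "player", "opponent" or "draw" for one round."""
    if player == opponent:
        return "draw"
    return "player" if BEATS[player] == opponent else "opponent"


def simulated_opponent(rng=random):
    """Pick a random gesture for the virtual opponent."""
    return rng.choice(sorted(BEATS))


opponent = simulated_opponent()
print(opponent, decide("Rock", opponent))
```

Storing the rules as a lookup table keeps `decide` free of nested conditionals and makes variants of the game a matter of editing the table.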

From play to everyday use

At first, the project is more of a gimmick. But what could come out of it? A gambling machine? Or maybe even an AI-based sign language translator?

To be continued…

uEye+ Warp10 cameras from IDS combine high speed and high resolution

See more, see better! New 10GigE cameras with onsemi XGS sensors up to 45 MP

In industrial automation, the optimisation of processes is often primarily about higher efficiency and accuracy. 10GigE cameras, such as those in the uEye+ Warp10 camera family from IDS Imaging Development Systems GmbH, set standards here. They enable high-speed image processing in Gigabit Ethernet-based networks even with large amounts of data and over long cable distances. For even more precision, the company is now introducing new models with sensors up to 45 MP that reliably capture even the smallest details.

The new industrial cameras are equipped with the onsemi global shutter sensors XGS20000 (20 MP, 1.3″), XGS30000 (30 MP, 1.5″) and XGS45000 (45 MP, 2″). They are primarily used in high-precision quality assurance tasks when motion blur needs to be minimised and data needs to be quickly available on the network. The 10GigE cameras offer up to ten times the transmission bandwidth of 1GigE cameras and are about twice as fast as cameras with USB3 interfaces.
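A back-of-the-envelope calculation shows why interface bandwidth matters at these resolutions. The sketch below estimates an upper bound on the frame rate a link can carry. It uses the nominal megapixel figures from the text, assumes 8 bits per pixel, and ignores protocol overhead and sensor readout limits, so actual camera frame rates will differ.

```python
def max_fps(megapixels, link_gbit_per_s, bits_per_pixel=8):
    """Upper bound on frames per second for uncompressed images on a link."""
    bits_per_frame = megapixels * 1e6 * bits_per_pixel
    return link_gbit_per_s * 1e9 / bits_per_frame


for mp in (20, 30, 45):
    print(f"{mp} MP: {max_fps(mp, 1):.1f} fps at 1 Gbit/s, "
          f"{max_fps(mp, 10):.1f} fps at 10 Gbit/s")
```

For a 45 MP image, a 1 Gbit/s link allows fewer than three uncompressed frames per second under these assumptions, while a 10 Gbit/s link allows roughly ten times as many.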

Accuracy and speed go hand in hand when it comes to these models. This has advantages for many applications, e.g. in inspection systems for status and end checks at production lines with high cycle rates – such as semiconductor or solar panel inspection. Users also benefit from the fact that even large scenes and image sections can be precisely monitored and evaluated with these cameras. This proves its worth, for example, in logistics tasks for incoming goods and in the warehouse.

The large format onsemi XGS sensors require correspondingly large optics. Therefore, unlike the previous uEye+ Warp10 models, they are equipped with a TFL mount (M35x0.75). For secure mounting, TFL lenses can be firmly screwed to the cameras. The flange focal distance is standardised and, at 17.526 mm, the same as for the previously available cameras with C-mount. To ensure optimal image quality, IDS recommends the use of Active Heat Sinks. They can be mounted both on and under the models, reduce the operating temperature and are optionally available as accessories.

More information: https://en.ids-imaging.com/ueye-warp10.html

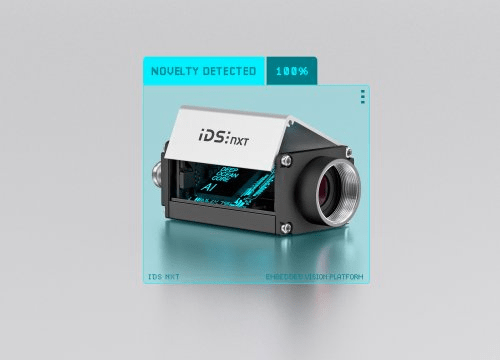

Free update makes third deep learning method available for IDS NXT

Update for the AI system IDS NXT: cameras can now also detect anomalies

In quality assurance, it is often necessary to reliably detect deviations from the norm. Industrial cameras have a key role in this, capturing images of products and analysing them for defects. If the error cases are not known in advance or are too diverse, however, rule-based image processing reaches its limits. By contrast, this challenge can be reliably solved with the AI method Anomaly Detection. The new, free IDS NXT 3.0 software update from IDS Imaging Development Systems makes the method available to all users of the AI vision system with immediate effect.

The intelligent IDS NXT cameras are now able to detect anomalies independently and thereby optimise quality assurance processes. For this purpose, users train a neural network that is then executed on the programmable cameras. To achieve this, IDS offers the AI Vision Studio IDS NXT lighthouse, which is characterised by easy-to-use workflows and seamless integration into the IDS NXT ecosystem. Customers can even use only „GOOD“ images for training. This means that relatively little training data is required compared to the other AI methods Object Detection and Classification. This simplifies the development of an AI vision application and is well suited for evaluating the potential of AI-based image processing for projects in the company.
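The principle of learning only from „GOOD“ samples can be illustrated with a very simple statistical stand-in. The sketch below is not the neural network method used by IDS NXT: it models a single hypothetical scalar feature (for example a measured dimension) from good samples and flags values that deviate by more than a chosen number of standard deviations.

```python
import statistics


def fit_good(features):
    """Learn mean and standard deviation from good samples only."""
    return statistics.mean(features), statistics.stdev(features)


def is_anomaly(value, model, max_z=3.0):
    """Flag values more than max_z standard deviations from the good mean."""
    mean, std = model
    return abs(value - mean) > max_z * std


# Hypothetical feature values measured on good parts.
good = [10.1, 9.9, 10.0, 10.2, 9.8]
model = fit_good(good)
print(is_anomaly(10.1, model))  # False
print(is_anomaly(12.0, model))  # True
```

No defective examples are needed to build the model, which is what makes this family of methods attractive when error cases are unknown or too diverse to collect in advance.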

Another highlight of the release is the code reading function in the block-based editor. This enables IDS NXT cameras to locate, identify and read out different types of code and the required parameters. Attention maps in IDS NXT lighthouse also provide more transparency in the training process. They illustrate which areas in the image have an impact on classification results. In this way, users can identify and eliminate training errors before a neural network is deployed in the cameras.

IDS NXT is a comprehensive AI-based vision system consisting of intelligent cameras plus software environment that covers the entire process from the creation to the execution of AI vision applications. The software tools make AI-based vision usable for different target groups – even without prior knowledge of artificial intelligence or application programming. In addition, expert tools enable open-platform programming, making IDS NXT cameras highly customisable and suitable for a wide range of applications.

More information: www.ids-nxt.com

Market launch: New Ensenso N models for 3D and robot vision

Upgraded Ensenso 3D camera series now available at IDS

Resolution and accuracy have almost doubled, the price has remained the same – those who choose 3D cameras from the Ensenso N series can now benefit from more advanced models. The new stereo vision cameras (N31, N36, N41, N46) can now be purchased from IDS Imaging Development Systems.

The Ensenso N 3D cameras have a compact housing (made of aluminium or plastic composite, depending on the model) with an integrated pattern projector. They are suitable for capturing both static and moving objects. The integrated projector projects a high-contrast texture onto the objects in question. A pattern mask with a random dot pattern compensates for missing or only weakly visible surface structures. This allows the cameras to deliver detailed 3D point clouds even in difficult lighting conditions.

With the Ensenso models N31, N36, N41 and N46, IDS is now launching the next generation of the previously available N30, N35, N40 and N45. Visually, the cameras do not differ from their predecessors. They do, however, use a new sensor from Sony, the IMX392. This results in a higher resolution (2.3 MP instead of 1.3 MP). All cameras are pre-calibrated and therefore easy to set up. The Ensenso selector on the IDS website helps to choose the right model.

Whether firmly installed or in mobile use on a robot arm: with Ensenso N, users opt for a 3D camera series that provides reliable 3D information for a wide range of applications. For example, the cameras prove their worth in single item picking, support remote-controlled industrial robots, are used in logistics and even help to automate high-volume laundries. IDS provides more in-depth insights into the versatile application possibilities with case studies on the company website.

Learn more: https://en.ids-imaging.com/ensenso-3d-camera-n-series.html

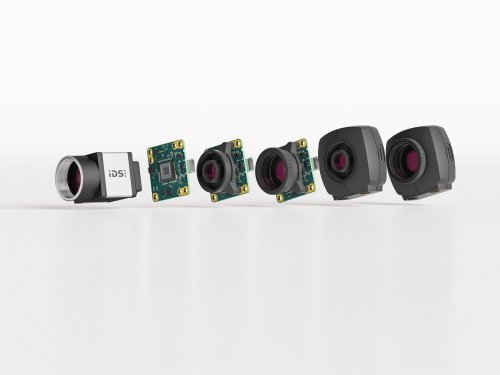

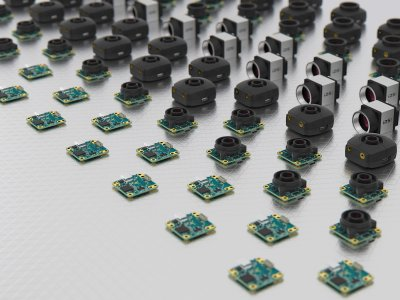

Quickly available in six different housing variants | IDS adds numerous new USB3 cameras to its product range

Anyone who needs quickly available industrial cameras for image processing projects faces a difficult task due to the worldwide chip shortage. IDS Imaging Development Systems GmbH has therefore been pushing the development of alternative USB3 hardware generations with available, advanced semiconductor technology in recent months and has consistently acquired components for this purpose. Series production of new industrial cameras with USB3 interface and Vision Standard compatibility has recently started. In the CP and LE camera series of the uEye+ product line, customers can choose the right model for their applications from a total of six housing variants and numerous CMOS sensors.

The models of the uEye CP family are particularly suitable for space-critical applications thanks to their distinctive, compact magnesium housing with dimensions of only 29 x 29 x 29 millimetres and a weight of around 50 grams. Customers can choose from global and rolling shutter sensors from 0.5 to 20 MP in this product line. Those who prefer a board-level camera instead should take a look at the versatile uEye LE series. These cameras are available with coated plastic housings and C-/CS-mount lens flanges as well as board versions with or without C-/CS-mount or S-mount lens connections. They are therefore particularly suitable for projects in small device construction and integration in embedded vision systems. IDS initially offers the global shutter Sony sensors IMX273 (1.6 MP) and IMX265 (3.2 MP) as well as the rolling shutter sensors IMX290 (2.1 MP) and IMX178 (6.4 MP). Other sensors will follow.

The USB3 cameras are perfectly suited for use with IDS peak thanks to the vision standard transport protocol USB3 Vision®. The Software Development Kit includes programming interfaces in C, C++, C# with .NET and Python as well as tools that simplify the programming and operation of IDS cameras while optimising factors such as compatibility, reproducible behaviour and stable data transmission. Special convenience features reduce application code and provide an intuitive programming experience, enabling quick and easy commissioning of the cameras.

Learn more: https://en.ids-imaging.com/news-article/usb3-cameras-series-production-launched.html