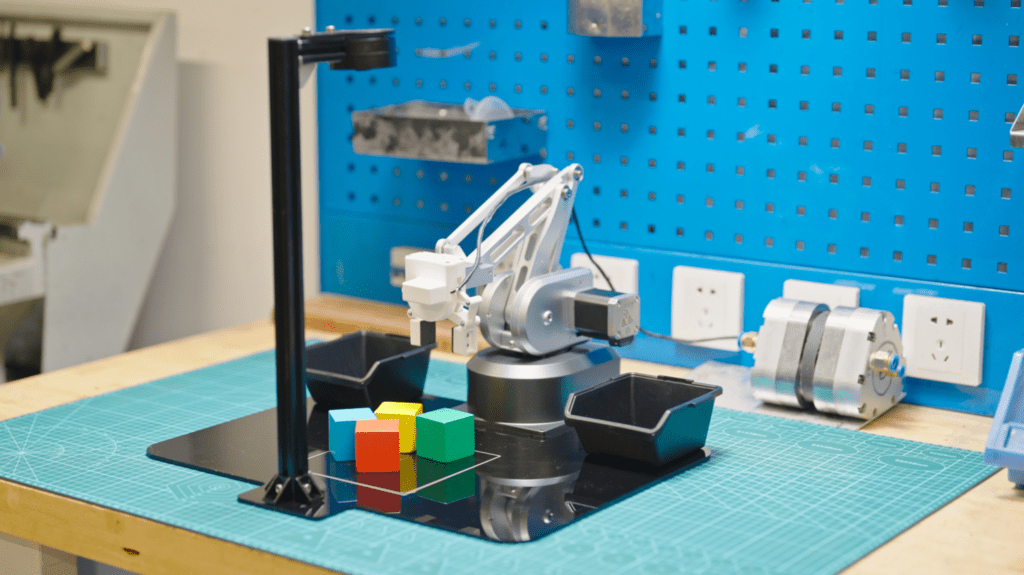

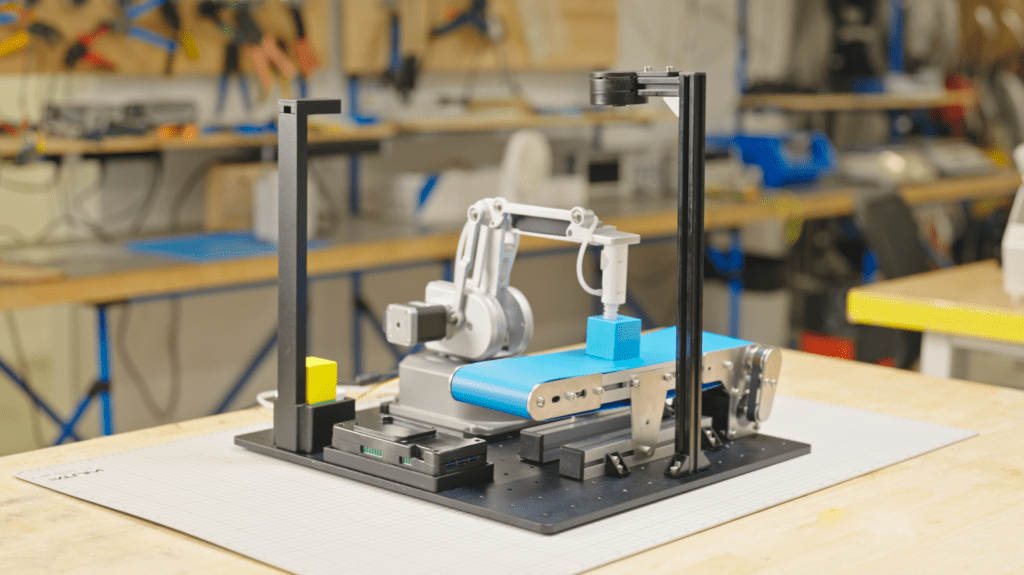

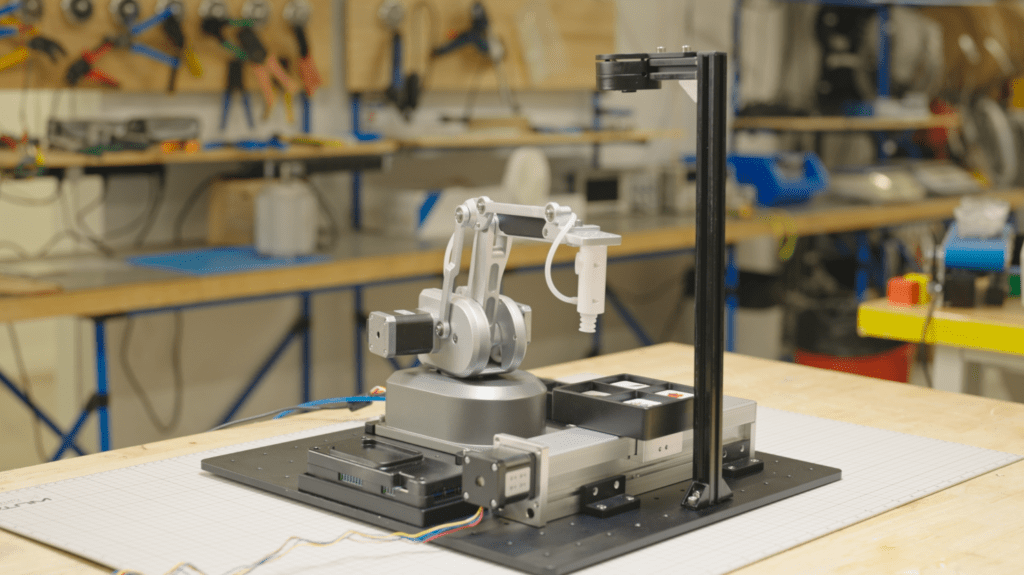

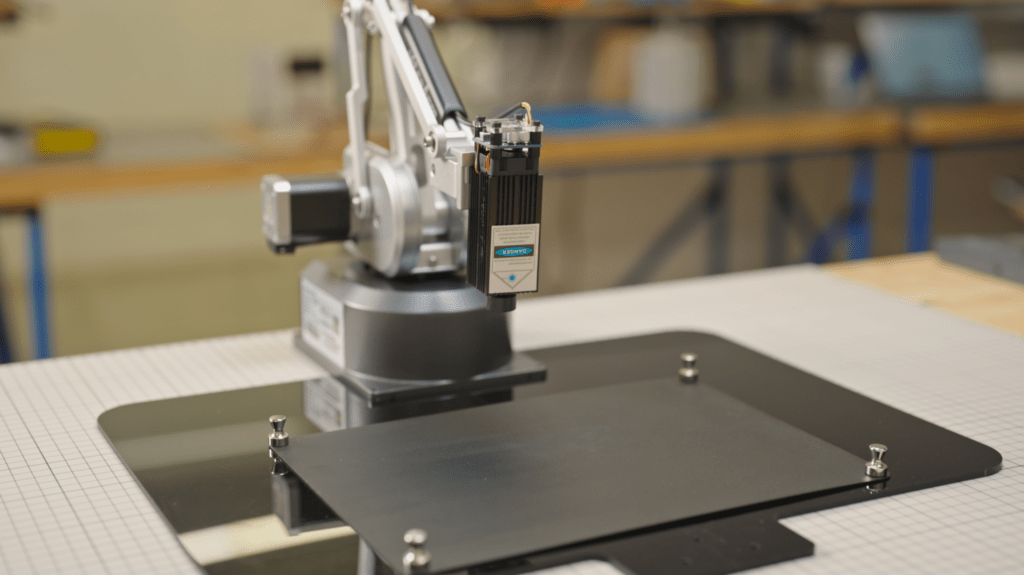

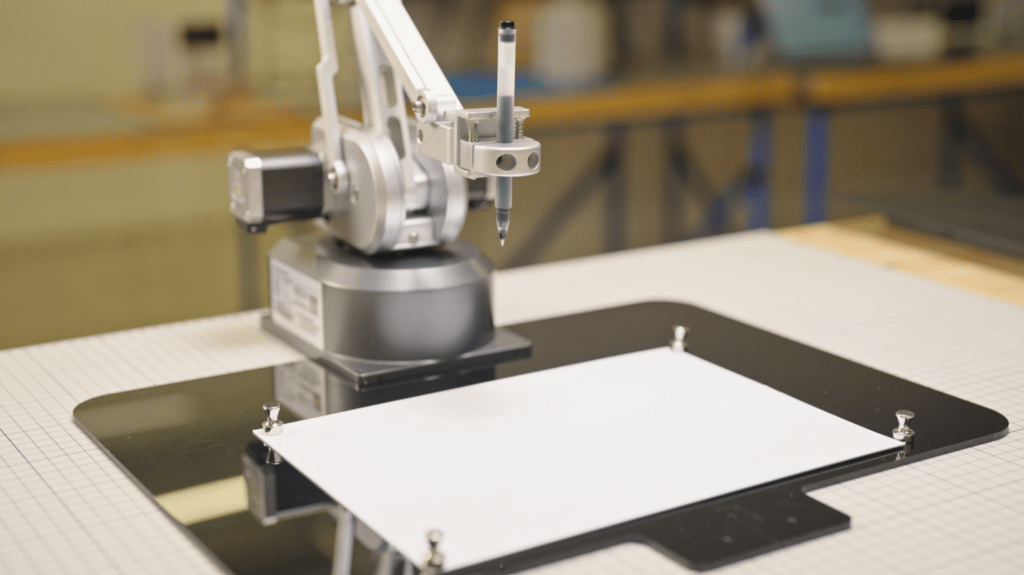

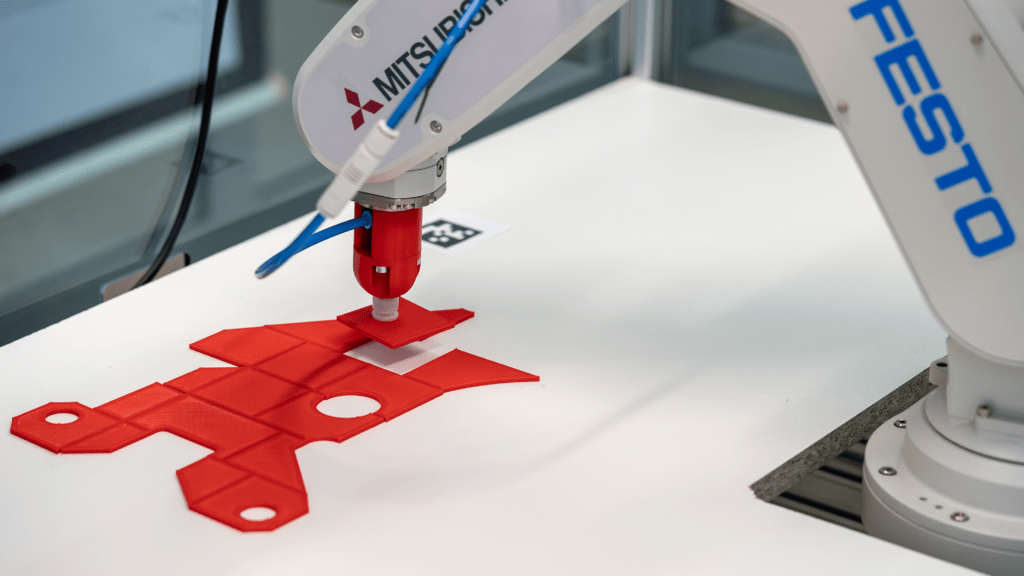

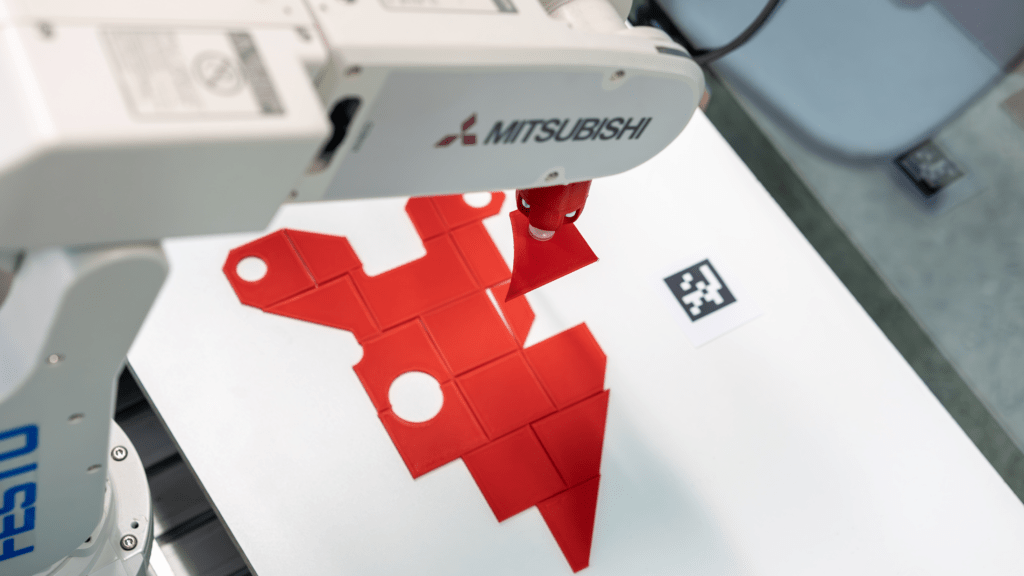

Pick-and-Place-Anwendungen sind ein zentrales Einsatzgebiet der Robotik. Sie werden häufig in der Industrie genutzt, um Montageprozesse zu beschleunigen und manuelle Tätigkeiten zu reduzieren – ein spannendes Thema für Informatik Masteranden des Instituts für datenoptimierte Fertigung der Hochschule Kempten. Sie entwickelten einen Roboter, der Prozesse durch den Einsatz von künstlicher Intelligenz und Computer Vision optimiert. Auf Basis einer Montagezeichnung ist das System in der Lage, einzelne Bauteile zu greifen und an vorgegebener Stelle abzulegen – vergleichbar mit einem Puzzle. Anschließend können die Teile dort manuell durch einen Mitarbeiter verklebt werden.

Zwei IDS Industriekameras liefern die nötigen Bildinformationen

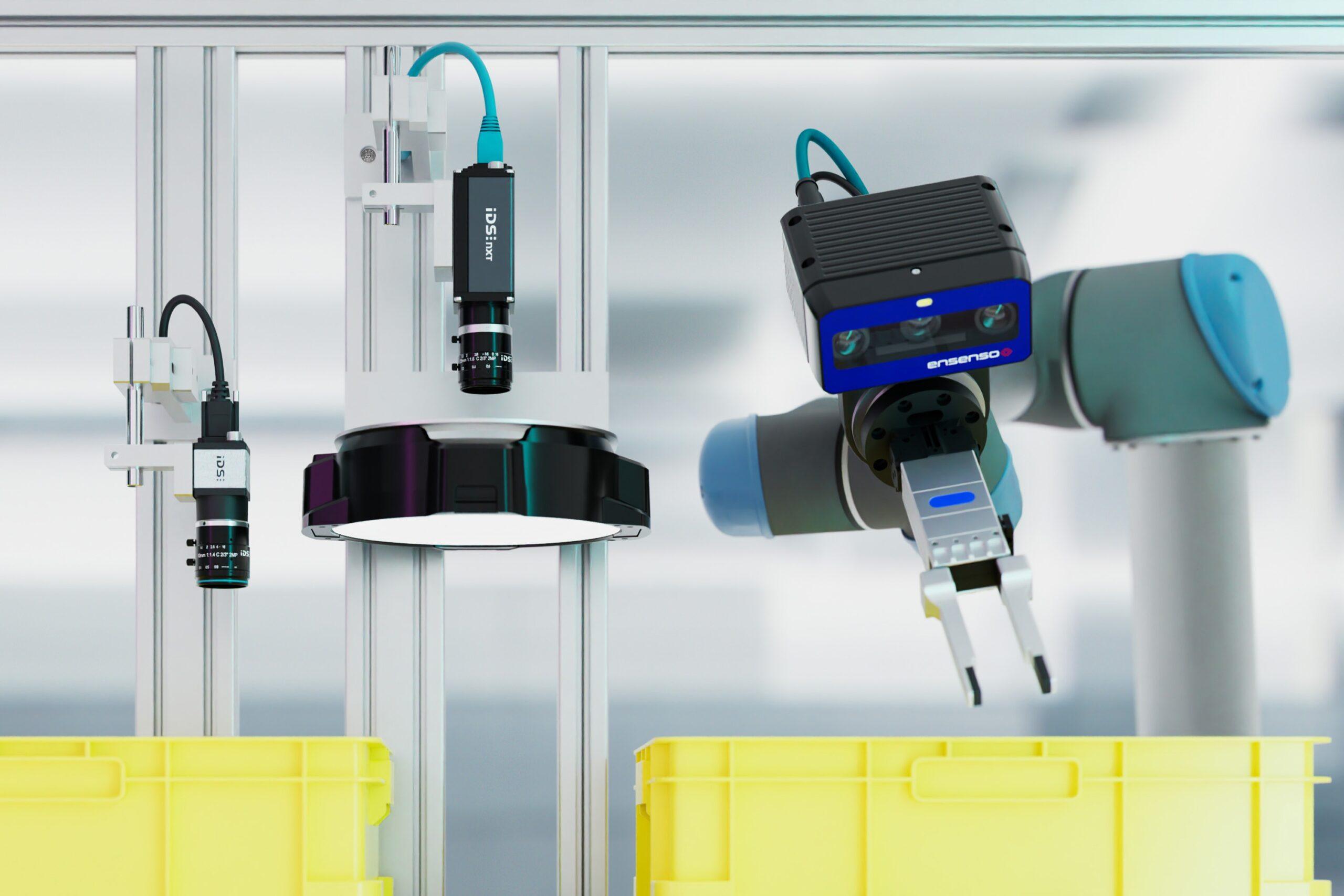

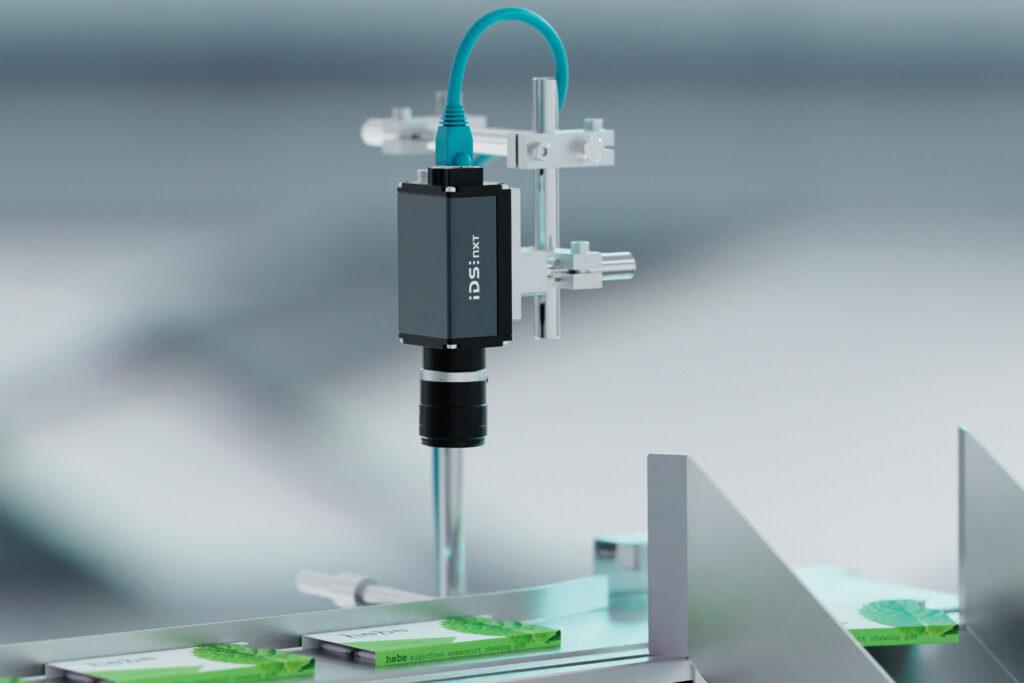

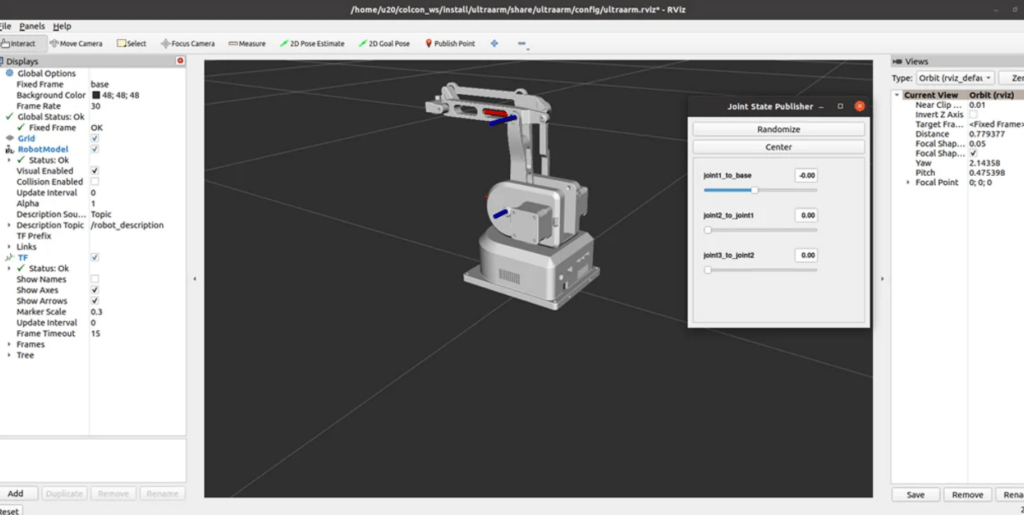

Mithilfe von zwei uEye XC Kameras und einer KI-gestützten Bildverarbeitung analysiert das System die Umgebung und berechnet präzise Aufnahme- sowie Ablagekoordinaten. Eine der Kameras wurde dazu über der Arbeitsfläche platziert, die andere über der Entnahmestelle. Konkret verarbeitet eine KI-Pipeline die Bilder der beiden Kameras in mehreren Schritten, um die exakte Lage und Ausrichtung der Objekte zu bestimmen. Mithilfe der Computer-Vision-Algorithmen und neuronalen Netzen erkennt das System relevante Merkmale, berechnet die optimalen Greifpunkte und generiert präzise Koordinaten für die Aufnahme und Ablage der Objekte. Zudem identifiziert das System die Teile eindeutig, indem es ihre Oberfläche segmentiert und die Konturen mit einer Datenbank abgleicht. Darüber hinaus nutzt es die Ergebnisse, um eine Annäherung an bereits abgelegte Teile zu ermöglichen. Die Automatisierungslösung reduziert damit die Abhängigkeit von Expertenwissen, verkürzt Prozesszeiten und wirkt dem Fachkräftemangel entgegen.

Kameraanforderungen

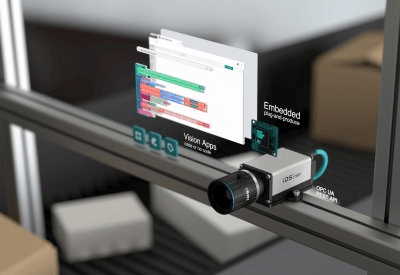

Schnittstelle, Sensor, Baugröße und Preis waren die Kriterien, die für die Wahl des Kameramodells entscheidend waren. Die uEye XC kombiniert die Benutzerfreundlichkeit einer Webcam mit der Leistungsfähigkeit einer Industriekamera. Sie erfordert lediglich eine Kabelverbindung für den Betrieb. Ausgestattet mit einem 13-MP-onsemi-Sensor (AR1335) liefert die Autofokus-Kamera hochauflösende Bilder und Videos. Eine wechselbare Makro-Aufsatzlinse ermöglicht eine verkürzte Objektdistanz, wodurch die Kamera auch für Nahbereichsanwendungen geeignet ist. Auch ihre Einbindung war denkbar einfach, wie Raphael Seliger, Wissenschaftlicher Mitarbeiter der Hochschule Kempten, erklärt: „Wir binden die Kameras über die IDS peak Schnittstelle an unser Python Backend an.“

Ausblick

Zukünftig soll das System durch Reinforcement Learning weiterentwickelt werden – einer Methode des maschinellen Lernens, die auf Lernen durch Versuch und Irrtum beruht. „Wir möchten gerne die KI-Funktionen ausbauen, um die Pick-and-Place Vorgänge intelligenter zu gestalten. Unter Umständen benötigen wir dafür eine zusätzliche Kamera direkt am Roboterarm“, erläutert Seliger. Geplant ist zudem eine automatische Genauigkeitsprüfung der abgelegten Teile. Langfristig soll der Roboter allein anhand der Montagezeichnung alle erforderlichen Schritte eigenständig ausführen können.