Voice assistants have become part of everyday life. They help us with tasks, answer our questions, and even entertain us. Have you ever wondered how voice assistants work, or how to build your own? Look no further: Spencer satisfies that curiosity and makes for a fun DIY project. This post introduces Spencer, a voice assistant that will brighten your day with jokes and answer your questions.

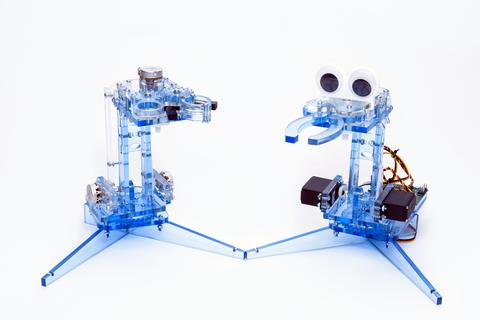

Meet Spencer

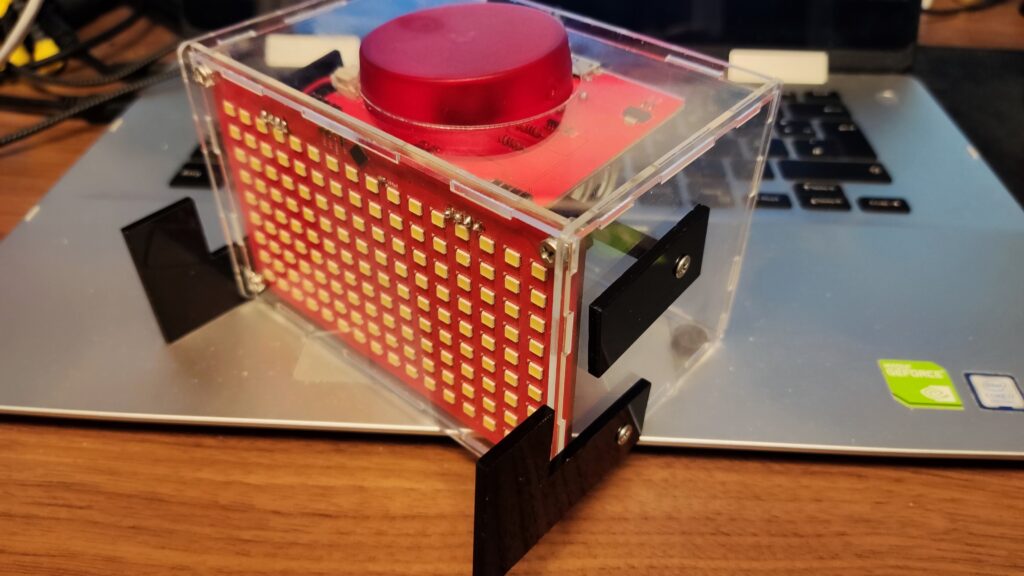

Spencer is more than a voice assistant; it is a buddy that talks with you. Its microphone picks up your voice so it can understand your questions, and pressing its large red button triggers an internet search that returns a straightforward answer. Spencer's endearing personality and knack for making you grin make it a welcome addition to your daily routine.

Spencer’s Features: Your Interactive Voice Assistant Companion

1. Voice Interaction

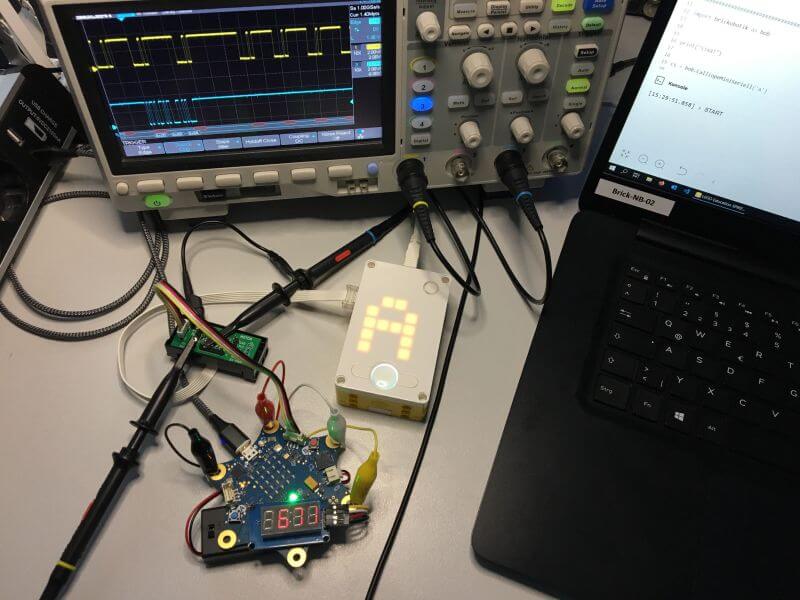

Spencer's built-in microphone picks up your voice clearly. Spencer understands your commands and questions and responds to casual chat, so talking to your voice assistant is simple and natural. Just speak, and Spencer answers much as a friend would, making it feel like a genuine conversation.

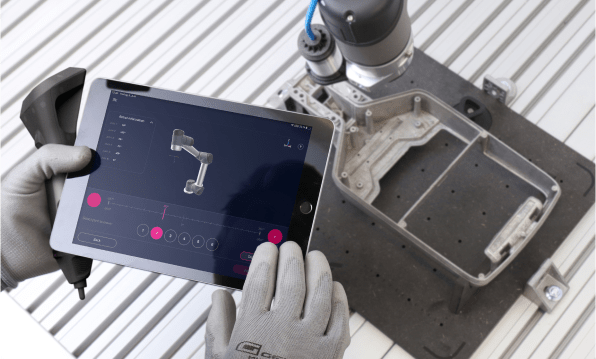

2. Internet Connectivity and Information Retrieval

Spencer connects to the internet, giving you access to a vast store of information. Press the big red button on its chest and Spencer runs a real-time internet search, then gives you a clear, concise answer. Use it to answer a trivia question, check the latest headlines, or look up a particular topic.
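
To make the push-to-ask flow concrete, here is a minimal Python sketch of one button-press cycle. It is not Spencer's actual firmware, which this post doesn't describe: `handle_button_press`, `listen`, `search`, and `speak` are hypothetical names, and the speech-recognition, web-search, and text-to-speech steps are passed in as stand-in functions so the control flow runs on any computer.

```python
from typing import Callable


def handle_button_press(
    listen: Callable[[], str],
    search: Callable[[str], str],
    speak: Callable[[str], None],
) -> str:
    """Run one hypothetical push-to-ask cycle and return the spoken answer."""
    # Stand-in for speech-to-text: returns the user's question as text.
    question = listen().strip()
    if not question:
        # Nothing was heard, so skip the search and say so.
        answer = "Sorry, I didn't catch that."
    else:
        # Stand-in for the real-time internet lookup.
        answer = search(question)
    # Stand-in for text-to-speech output.
    speak(answer)
    return answer


# Example run with fake components in place of real hardware and services.
spoken: list = []
result = handle_button_press(
    listen=lambda: "What is the capital of France?",
    search=lambda q: "Paris is the capital of France.",
    speak=spoken.append,
)
print(result)  # Paris is the capital of France.
```

Passing the three steps in as functions keeps the button logic separate from the hardware, so each stage could be swapped for a real microphone, search service, or speaker without changing the cycle itself.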

3. Personalization and Customization

Spencer is designed to be uniquely yours, and you can adapt its features and responses to your tastes. Change its exterior with new colors or decals, or add accessories, so it reflects your style and personality. For a truly personal experience, you can also adjust its responses, jokes, and interactions to match your sense of humor.
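
If your kit lets you program Spencer's replies, one simple way to organize custom jokes and responses is a keyword table. The Python sketch below assumes nothing about Spencer's real software: `RESPONSES`, `DEFAULT_REPLY`, and `reply_to` are hypothetical names, and matching is a plain keyword check rather than real language understanding.

```python
import random
from typing import Dict, List, Optional

# Hypothetical personality table: edit the keywords and lines to change Spencer's humor.
RESPONSES: Dict[str, List[str]] = {
    "joke": [
        "Why did the robot go on vacation? It needed to recharge its batteries.",
        "Why was the robot so tired? It had a hard drive.",
    ],
    "hello": [
        "Hi there! Ready for some fun?",
        "Hello, friend! Press my red button if you have a question.",
    ],
}

# Hypothetical fallback when no keyword matches.
DEFAULT_REPLY = "I'm not sure about that one. Press my red button and I'll look it up."


def reply_to(utterance: str, rng: Optional[random.Random] = None) -> str:
    """Return a random canned reply for the first matching keyword, else the fallback."""
    rng = rng or random.Random()
    text = utterance.lower()
    for keyword, options in RESPONSES.items():
        if keyword in text:
            return rng.choice(options)
    return DEFAULT_REPLY


print(reply_to("Spencer, tell me a joke!"))
```

Because the table is plain data, personalizing the humor means editing lists rather than rewriting logic; passing a seeded `random.Random` makes the replies repeatable while testing.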

4. Entertainment and Engagement

Spencer knows that laughter matters. Its built-in jokes and playful replies make talking to your voice assistant fun as well as informative. Whether you need a quick pick-me-up or want to share a laugh with friends and family, Spencer keeps things entertaining.

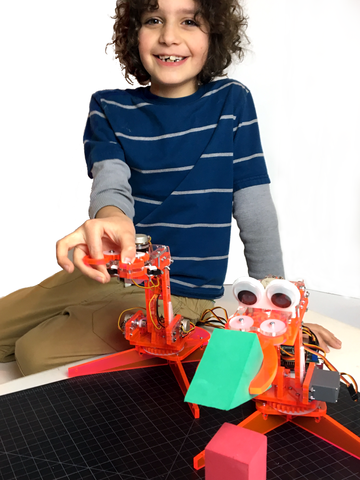

5. Learning and Educational STEM Experience

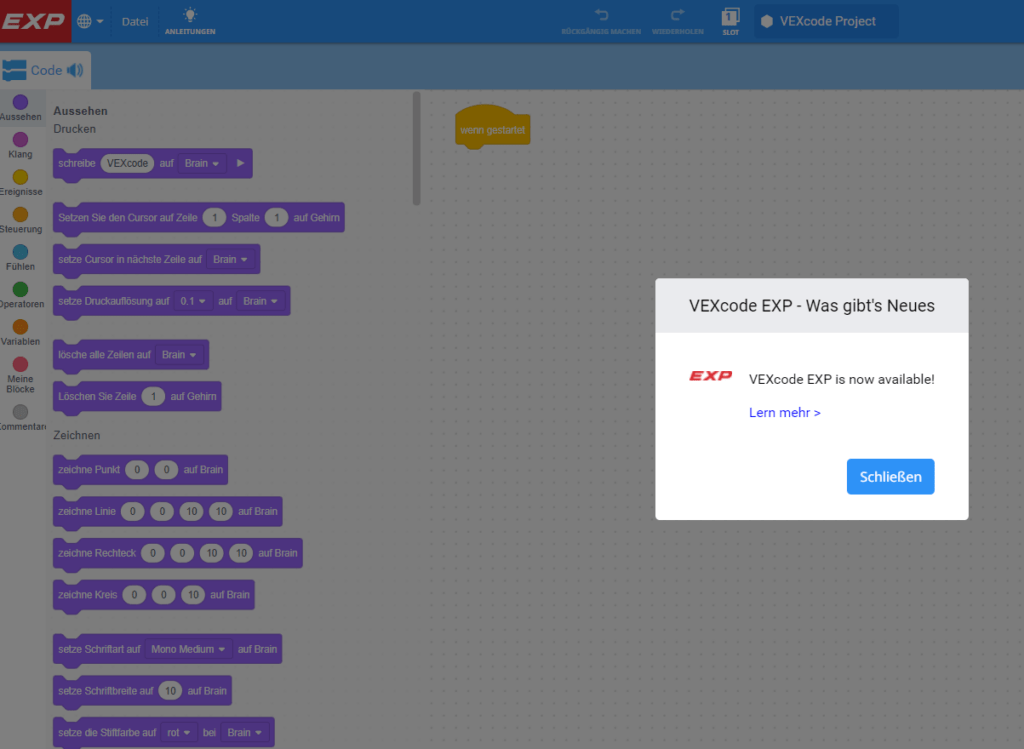

Spencer's educational mission centers on STEM (science, technology, engineering, and mathematics). Building Spencer teaches practical skills in electronics, soldering, component assembly, and circuits. To extend Spencer's abilities further, you can explore programming and gain hands-on experience with coding and computational thinking.

6. Inspiration and Creativity

Spencer is a springboard for your imagination and for further exploration. As you assemble and customize your voice assistant, you can let your creativity run wild. This DIY project encourages critical thinking, problem-solving, and invention, building a creative mindset that extends well beyond building Spencer.

Recommended Age Group

Spencer is intended for builders aged 11 and up. Most assembly steps are simple, but some, such as soldering and tightening fasteners, call for care. Don't hesitate to ask an adult for help if you need it; for certain tools and techniques, adult guidance is a good idea.

Assembly Time Required

Building Spencer takes about 4 hours on average, though the time may vary with your prior experience. Don't worry if you're new to electronics! Take your time, enjoy the process, and don't let early difficulties discourage you. The steps will feel more familiar as you go along.

Skills Required

No special skills are needed to start this DIY project; the main goals are to have fun and learn something new. Building Spencer introduces you to electronics, can spark your interest in STEM, and gives you hands-on experience. Think of it as a possible first step toward a career in engineering.

Pros and Cons of Spencer

Pros of Spencer

- Spencer provides an interactive experience, responding to voice commands and holding conversations so it feels like a real companion.

- With internet connectivity, Spencer can retrieve information in real time, giving you quick answers to your questions and saving you time.

- Spencer can be customized to reflect your style and preferences, allowing you to personalize its appearance, responses, and interactions.

- Spencer comes with built-in jokes and entertaining responses, adding fun and amusement to your interactions with the voice assistant.

- Building Spencer provides hands-on learning in electronics, soldering, circuitry, and programming, offering a valuable educational experience in STEM disciplines.

Cons of Spencer

- The assembly process of Spencer may involve technical aspects such as soldering and component assembly, which can be challenging for beginners or individuals with limited experience.

- Spencer heavily relies on internet connectivity to provide real-time answers and retrieve information, which means it may have limited functionality in areas with poor or no internet connection.

- While Spencer offers basic voice assistant features, its capabilities may be more limited compared to advanced commercially available voice assistant devices.

Conclusion

Building Spencer, your own voice assistant, is a fascinating and worthwhile endeavor. As you work through the assembly, you'll learn useful skills, deepen your understanding of electronics, and enjoy the thrill of putting a complex gadget together. Remember that the goal is not only to produce a finished product but also to enjoy learning, solving problems, and letting your imagination run free. So get ready to join Spencer on this journey and discover a world of possibilities in voice assistants.

Get your own Spencer Building kit here: bit.ly/RobotsBlog